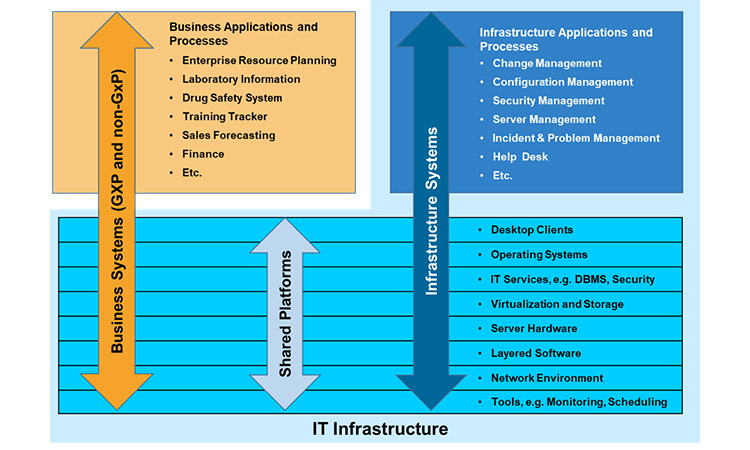

IT Infrastructure in the Current Life Science Company Environment

While the expectations for what IT infrastructure is supposed to do, namely provide a reliably stable, secure and controlled platform for business applications, have not changed since the first edition of GAMP 5® was published in 2007, the nature of the infrastructure and the manner in which it is managed have undergone two principal changes. This post examines these changes and argues that all is not as different as it may appear.

Change Number 1: IT Infrastructure as a Commodity

As long as two decades ago, many large life science companies began to think that IT services would become a commodity, much like electric power. The initial forays into this mindset did not go all the way to this vision; instead, many companies outsourced the management of their own data centers and networks. They still owned the hardware and had input into the processes used to manage it, but no longer employed the staff. Cloud services completed this move a few years later: everything from hardware to software to staff, and even the processes, was now managed by a third party. Initial uptake by life science companies was slow, and, unsurprisingly, the first application of the cloud model was driven by a business need. Large Phase 3 clinical studies collect a tremendous amount of data, and analyzing and understanding that data requires truly intensive computing resources, ideally for a relatively short time. Companies tried innovative but largely unsuccessful approaches, such as harnessing the power of all their idle PCs overnight, but the best answer lay in the cloud: flexible resources to meet brief periods of high demand, and metered costs.

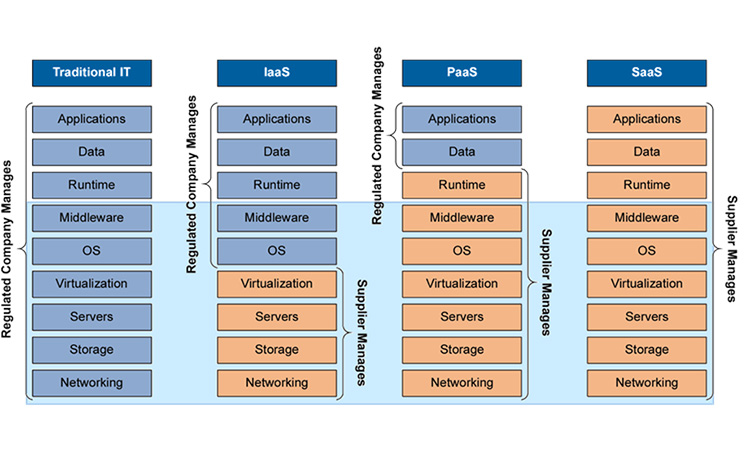

Still, uptake in other GxP areas was slow. Companies did not know how regulators would react to applications critical to patient safety, such as a LIMS that manages release decisions, running in the cloud, where the company could not directly demonstrate control of the infrastructure. Instead, they dipped their figurative toes in the water, first leveraging Infrastructure as a Service (the second column in Figure 2), often doing little more than buying disk space. Companies also fretted about the expense of setting up private clouds or engaging boutique cloud suppliers that served only life science businesses.

The difficulty with cloud suppliers lay in the fact that, with very few exceptions, they are not GxP-regulated and do not particularly care about GxP. This, however, does not mean that they are universally unsuited to host GxP applications. On the contrary, managing IT services is their core competency, and they are likely to be more expert at it than most life science companies. The central issue is ensuring that they maintain an adequate state of control over their infrastructure. This can be determined through an effective supplier evaluation process, a robust set of contractual agreements on service levels and quality, and ongoing monitoring.

Such supplier evaluations¹ are likely to find that cloud suppliers have superior controls, because they perform more thorough continuous monitoring and have robust automation and processes in place. This is not to say that they cannot or will not have problems: they depend on a constant electricity supply and are subject to extreme weather like everyone else. Chances are, however, that their business continuity and disaster recovery practices are well designed and effective. All of this must, of course, be verified as part of the supplier evaluation, and the criticality of applications that depend on cloud resources must be clearly stated and agreed in a contractual document.

Another somewhat specious concern that plagued those wanting to take advantage of the economic benefits of the cloud was what else is co-located on the same hardware. In reality, almost everything at a cloud supplier runs in virtual environments, which are logically isolated from everything they are not specifically supposed to communicate with. This is just as secure as being on separate hardware. As a result, it doesn’t matter if a pharma application is running on the same server as applications from an automobile manufacturer and an online gaming company; they cannot interact, and they cannot “steal” computing cycles from one another. In fact, they may be co-resident only today; tomorrow one or more of those virtual machines may move to another hardware server as part of load balancing. Similarly, data cannot be “contaminated” by sharing a storage array with another client.

There may be some valid concerns over the protection of intellectual property and data privacy, but these can generally be managed through extensive security controls, including encryption. For truly sensitive data, the encryption keys can be managed by the life science company, so that even if a cloud DBA must work directly with the data, it will be unreadable to them.

Summing up, the following can be said about the use of cloud resources:

- Infrastructure controls at cloud suppliers will generally be better than those applied by a life science company

- There is no real reason for a life science company not to leverage cloud resources if it makes business sense

- It is incumbent upon the life science company to do the following²:

  - Undertake an effective supplier evaluation to verify that the supplier's controls ensure the confidentiality, integrity and availability of GxP applications and data

  - Ensure that adequate disaster recovery and/or business continuity provisions are in place

  - Ensure that the required confidentiality of data is managed through information security provisions, including encryption. Compliance with a standard such as ISO/IEC 27001 is a good start.

  - Ensure that there is a viable disengagement plan should the relationship need to be severed

  - Ensure that issues critical to the life science company are enshrined in contractual agreements such as a Quality Agreement and/or a Service Level Agreement.
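Checking the service levels agreed in a contract against what monitoring actually records is one concrete way to keep a supplier relationship under control. The minimal Python sketch below assumes the SLA expresses availability as a percentage over a fixed period; the class and function names and the 99.9 % figure are hypothetical and are not taken from any particular agreement.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SlaTarget:
    """Availability commitment as it might be written into an SLA (hypothetical)."""
    availability_pct: float  # e.g. 99.9 means 99.9 % uptime in each period
    period_minutes: int      # length of the measurement period in minutes


def allowed_downtime_minutes(target: SlaTarget) -> float:
    """Maximum total outage the SLA tolerates within one period."""
    return target.period_minutes * (1 - target.availability_pct / 100)


def measured_availability_pct(period_minutes: int, outage_minutes: list[float]) -> float:
    """Availability actually observed by monitoring, given recorded outage durations."""
    return 100 * (period_minutes - sum(outage_minutes)) / period_minutes


def sla_met(target: SlaTarget, outage_minutes: list[float]) -> bool:
    """True if the recorded outages stay within the contractual allowance."""
    return sum(outage_minutes) <= allowed_downtime_minutes(target)


# Hypothetical example: 99.9 % over a 30-day month (43,200 minutes)
monthly = SlaTarget(availability_pct=99.9, period_minutes=30 * 24 * 60)
print(round(allowed_downtime_minutes(monthly), 1))  # 43.2
print(sla_met(monthly, [10, 20]))                   # True
print(sla_met(monthly, [30, 20]))                   # False
```

Comparing total outage minutes with the allowance, rather than comparing rounded percentages, avoids disputes over how availability figures were rounded in a monthly report.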

Change Number 2: Embracing Tools and Automation

It stands to reason that, as IT services are commoditized, both life science companies and other users of infrastructure (such as cloud suppliers) look for solutions that make infrastructure deployment and management easier and more efficient. Managing IT infrastructure involves handling large numbers of identical or similar tasks and components. For example:

- Backup processes are very similar for all data and programs, generally differing only in the location of the data to be backed up and where it will be stored, the frequency of the backup process (both incremental and full), and possibly the length of time for retention of the backup copy.

- Servers can be built to a common standard before they are further modified to run specific applications.

- Network switches are essentially identical and are configured via standard processes when installed.
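Because backup jobs differ only in a handful of parameters, they can be described as instances of one template. The Python sketch below is a hypothetical illustration of that idea, not the configuration format of any real backup product; the field names, paths and schedule rule (a full backup every N days, with optional incrementals on the other days) are assumptions.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional


@dataclass(frozen=True)
class BackupJob:
    """One instance of a shared backup template; only these parameters vary."""
    source: str              # location of the data to be backed up
    destination: str         # where the backup copy will be stored
    full_every_days: int     # a full backup runs every N days
    incremental_daily: bool  # whether incremental backups run on the other days
    retention_days: int      # how long each backup copy is kept


def backup_type_for(job: BackupJob, day_index: int) -> Optional[str]:
    """Return 'full', 'incremental', or None for a given day of the schedule."""
    if day_index % job.full_every_days == 0:
        return "full"
    return "incremental" if job.incremental_daily else None


def expiry_date(job: BackupJob, taken_on: date) -> date:
    """Date on which the retention period for a copy taken on `taken_on` ends."""
    return taken_on + timedelta(days=job.retention_days)


# Two hypothetical jobs built from the same template
lims_db = BackupJob("/data/lims", "vault-a", full_every_days=7,
                    incremental_daily=True, retention_days=365)
file_share = BackupJob("/data/shares", "vault-b", full_every_days=7,
                       incremental_daily=False, retention_days=90)

print([backup_type_for(lims_db, d) for d in range(3)])  # ['full', 'incremental', 'incremental']
print(backup_type_for(file_share, 3))                   # None
print(expiry_date(lims_db, date(2024, 1, 1)))           # 2024-12-31
```

Keeping the logic in one place and the differences in data means the template itself only needs to be verified once, while each new job is reviewed just for its parameters.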

Processes such as these readily lend themselves to automation. Consider the server build process: efficient organizations, both life science companies and IT service suppliers, use automated scripts to load the required operating systems and layered software in order to produce partially or fully configured servers. These processes have been demonstrated to generate the desired results, i.e. they are fit for purpose, and they often include built-in error checking and review-by-exception verification. This means that they require human intervention only if a fault occurs in the build process. The result is a qualified server platform.
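The build-and-verify pattern described above can be sketched as follows. This is a minimal, hypothetical Python illustration, not any vendor's provisioning tool: each scripted step is paired with its own check, the run stops at the first failed check, and only a build with a recorded exception is flagged for human review. The step names and the `server` dictionary standing in for a real machine are assumptions.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class BuildStep:
    name: str
    run: Callable[[dict], None]     # applies the step to the server state
    verify: Callable[[dict], bool]  # built-in error check for that step


@dataclass
class BuildReport:
    completed: list[str] = field(default_factory=list)
    exceptions: list[str] = field(default_factory=list)

    @property
    def needs_review(self) -> bool:
        # Review by exception: a person only looks at builds that recorded a fault.
        return bool(self.exceptions)


def build_server(steps: list[BuildStep], server: dict) -> BuildReport:
    """Run each scripted step, verify it, and stop at the first fault."""
    report = BuildReport()
    for step in steps:
        try:
            step.run(server)
            ok = step.verify(server)
        except Exception as exc:  # an error raised by the step counts as a fault
            report.exceptions.append(f"{step.name}: {exc}")
            break
        if not ok:
            report.exceptions.append(f"{step.name}: verification failed")
            break  # later steps assume this one succeeded
        report.completed.append(step.name)
    return report


# Hypothetical standard build: base OS first, then layered software
standard_build = [
    BuildStep("install_os",
              run=lambda s: s.update(os="base-os-1.0"),
              verify=lambda s: s.get("os") == "base-os-1.0"),
    BuildStep("install_monitoring_agent",
              run=lambda s: s.update(agent="monitor-2.3"),
              verify=lambda s: s.get("agent", "").startswith("monitor-")),
]

server = {}
report = build_server(standard_build, server)
print(report.completed, report.needs_review)
# ['install_os', 'install_monitoring_agent'] False
```

Stopping at the first failure is a design choice for the sketch: later steps usually depend on earlier ones, so continuing would only generate misleading secondary errors for the reviewer.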

Other aspects of infrastructure management can be handled very efficiently and effectively using commercially available tools. There are many such tools; a few examples follow:

- Network monitoring tools that automatically detect problems and route traffic around faulty nodes until they can be repaired.

- Capacity management tools that detect and report when storage space or database tablespace is running low, enabling corrective action before undesired consequences occur.

- Scheduling tools that start backups or file transfers at times of low network demand.

- Event monitoring tools that automatically detect and report security incidents, application errors and infrastructure errors.

- Configuration management tools that detect unauthorized configuration changes and revert them to an approved baseline.
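Two of the checks listed above, capacity thresholds and detection of unauthorized configuration changes, reduce to simple comparisons. The Python sketch below is illustrative only: the 80 % threshold, the setting names and the idea of holding the approved baseline as a dictionary are assumptions, and commercial tools gather this data from agents or APIs rather than from in-memory values.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Alert:
    kind: str
    detail: str


def check_capacity(used_gb: float, total_gb: float, warn_pct: float = 80.0) -> list[Alert]:
    """Raise an alert once usage crosses the threshold, before storage actually fills."""
    pct = 100 * used_gb / total_gb
    if pct >= warn_pct:
        return [Alert("capacity", f"{pct:.1f}% used (threshold {warn_pct:.0f}%)")]
    return []


def detect_drift(baseline: dict[str, str], live: dict[str, str]) -> list[Alert]:
    """Report every setting that differs from the approved baseline, including added or removed ones."""
    alerts = []
    for key in sorted(baseline.keys() | live.keys()):
        expected, found = baseline.get(key), live.get(key)
        if expected != found:
            alerts.append(Alert("drift", f"{key}: expected {expected!r}, found {found!r}"))
    return alerts


def revert_to_baseline(baseline: dict[str, str], live: dict[str, str]) -> None:
    """Restore the approved configuration in place, dropping unapproved settings."""
    live.clear()
    live.update(baseline)


# Hypothetical settings and storage figures
baseline = {"ssh_root_login": "no", "ntp_server": "time.example.internal"}
live = {"ssh_root_login": "yes", "ntp_server": "time.example.internal", "debug": "on"}

alerts = detect_drift(baseline, live) + check_capacity(used_gb=850, total_gb=1000)
for alert in alerts:
    print(alert.kind, "-", alert.detail)
revert_to_baseline(baseline, live)
print(detect_drift(baseline, live))  # []
```

Whether a detected drift is reverted automatically or first routed through change control is a procedural decision; the sketch only shows that both the detection and the reversion are mechanical once an approved baseline exists.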

Such tools are considered GAMP® Category 1 (Infrastructure Software). There is effectively no direct risk to patients from such tools, which sit at a similar level to operating systems, database engines and the like. As Category 1 software, they are qualified, not validated. There should be evidence that they have been installed and configured properly, that their records are protected, and that they are managed in a state of control.
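As one illustration of keeping qualification evidence protected, the hypothetical Python sketch below records installation and configuration checks for a Category 1 tool in a hash-chained, append-only log. Hash chaining is an assumption chosen for the example, not a technique GAMP 5 prescribes; it makes later edits to earlier records detectable, but it does not replace access controls.

```python
import hashlib
import json
from dataclasses import dataclass, field


@dataclass
class QualificationLog:
    """Append-only log of installation/configuration checks (hypothetical design).

    Each entry stores the SHA-256 of the previous entry, so altering an earlier
    record breaks the chain and is detected by verify().
    """
    entries: list[dict] = field(default_factory=list)

    def record(self, check: str, expected: str, actual: str) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else "0" * 64
        body = {
            "check": check,
            "expected": expected,
            "actual": actual,
            "result": "pass" if expected == actual else "fail",
            "prev_hash": prev_hash,
        }
        body["hash"] = self._digest(body)
        self.entries.append(body)
        return body

    @staticmethod
    def _digest(body: dict) -> str:
        # Hash every field except the stored hash itself, in a stable key order.
        payload = {k: v for k, v in body.items() if k != "hash"}
        return hashlib.sha256(json.dumps(payload, sort_keys=True).encode()).hexdigest()

    def verify(self) -> bool:
        prev_hash = "0" * 64
        for entry in self.entries:
            if entry["prev_hash"] != prev_hash or entry["hash"] != self._digest(entry):
                return False
            prev_hash = entry["hash"]
        return True


log = QualificationLog()
log.record("installed version", expected="5.2", actual="5.2")  # hypothetical values
log.record("alert e-mail recipient", expected="it-ops", actual="it-ops")
print(log.verify())  # True
log.entries[0]["actual"] = "5.1"  # simulate an after-the-fact edit
print(log.verify())  # False
```

The chain only proves integrity relative to the most recent hash, so in practice that value would be stored somewhere the tool's own administrators cannot rewrite.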

Conclusion

While the expectation of a controlled IT infrastructure hasn’t changed in the past fifteen years, the ways in which it is achieved have advanced. Effective supplier governance, including ongoing evaluation and monitoring, together with effective contracts and exit strategies, is essential when delegating infrastructure management and control responsibilities to a third party. Whether infrastructure is managed internally or by a supplier, the use of automation and tools to improve the efficiency and effectiveness of processes is ubiquitous.

Finally, the risk/benefit balance for the tools and services discussed above is almost always overwhelmingly positive.

For further information on managing infrastructure see GAMP 5® Second Edition Appendix M11.