The Road to Explainable AI in GxP-Regulated Areas

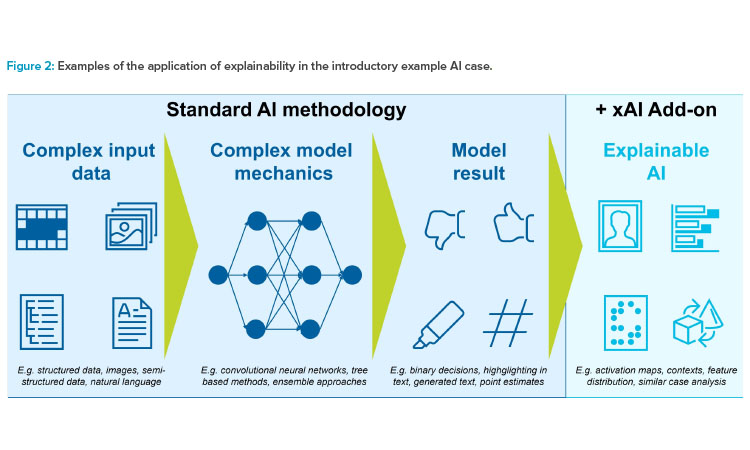

Recent advances in artificial intelligence (AI) have led to its widespread industrial adoption, with machine learning (ML) algorithms demonstrating improved performance in a wide range of tasks. However, this comes with an ever-increasing complexity of the algorithms used, rendering such systems more difficult to explain.1 AI developments offer a solution: explainable AI (xAI), i.e., additional modules on top of the core AI solution that are designed to explain the results to a human audience.

The audience of xAI may be a human operator during runtime, a quality assurance (QA) team, or an auditor. xAI can help address the algorithmic complexity of AI, which otherwise makes it difficult for users, subject matter experts, and stakeholders from QA functions to comprehend the solution’s decisions. This lack of comprehension hinders acceptance and puts the potential benefits at high risk of never being realized,2 which in turn impedes innovative projects themselves.

Current regulatory initiatives also hint at interest in xAI, such as those of the European Union3, 4 and the US Food and Drug Administration (FDA) guidance5 addressing concepts of trustworthy AI and human-machine interaction.

In this article, we elaborate on the benefits of and requirements for xAI from a GxP point of view along the development and production process, with a focus on strategies to ensure that the intention of use is met and to manage the safety risks that arise from xAI itself. For effective operationalization, we suggest creating a roadmap to xAI in GxP-regulated areas that integrates crucial stakeholders during the life cycle of the AI application regarding xAI design, development, and control.

We provide a structured, generalized approach to designing explainable AI solutions along the product life cycle to ensure trust and effectiveness in productive operation regulated by GxP requirements. We aim for GxP compliance of the explainable AI “add-on” itself, as it will fall under the same regulatory requirements when used in a safety-critical environment.

We continue our discussion from previous Pharmaceutical Engineering® articles about the AI maturity model6 and the AI governance and QA framework.7 Those articles introduced the concepts of autonomy and control in order to determine the target operating model of an AI solution and a development and QA governance framework, reflecting the evolutionary nature of data-driven solutions. Building on these concepts, we provide insights and guidance on how to effectively support the “Human-AI-Team,” a term used by the FDA5 that we believe describes very well the target operating model when interacting with AI: in a collaborative manner, complementing the strengths of cognitive as well as artificial intelligence in GxP-related areas. We provide further details on the general approach to GxP-compliant AI as outlined in ISPE’s GAMP® 5 Guide, 2nd Edition,8 especially in Appendix D11.

The article discusses:

- The black-box dilemma of AI applications: An example case to demonstrate how the complex nature of AI applications may hinder trust and operational agility when not complemented by xAI

- Needs and responsibilities for xAI across corporate functions: Elaborating on how the core business functions may support overcoming the black-box dilemma

- The road to xAI along the product life cycle: The road to xAI in a GxP context, i.e., an organizational blueprint to integrate corporate functions with their needs and responsibilities

- Guidance to measure effectiveness of xAI: For the specific aspects of validation, we provide guidance on how to set up an xAI test plan

The Black-Box Dilemma

Imagine an AI-featured application that is clearly superior to existing rule-based computerized systems in a production process, e.g., a monitoring system that determines erroneous states of critical production steps. The introductory case is inspired by the study “Preselection of Separation Units and ML-supported Operation of an Extraction Column.”9 In our case, superiority entails an earlier detection of erroneous states and, therefore, a measurable reduction in downtime, leading to better adherence to the quality expectations of our product. Another assumption in our case is that we were able to show this performance in a test or retrospective simulation setting by statistical measures, e.g., when comparing potential downtimes with and without our AI application in place.

Despite the quantitative outcomes sketched above, we must think about the context of use and the stakeholders interacting with the solution. The stakeholders may deem the application a “black box,” i.e., they might not be able to interpret its results, even if they acknowledge the AI’s performance in a statistical sense. In more detail, we may face the following impediments that arise when typical stakeholders in a GxP context are not able to link the application’s results with their respective mental models:

- Daily operation view: Users may not trust the solution’s indications for erroneous states. From a user’s point of view, this scenario is fully comprehensible since superior predictive power is only possible if formerly applied systems produced more false positives or false negatives than the AI alternative. Hence, notifications of the new solution will be surprising, especially when users lack understanding of why the notification has been raised. In another adverse scenario, users may blindly trust the AI solution, even for erroneous indications.

- Validation and QA view: The QA function must carefully monitor incidents raised by human operators or the automated quality control functions. In doing so, it must conclude whether the process is under sufficient control or whether further measures must be taken. Yet with only the incident events, the complex input data, and the algorithm at hand, clustering the information and identifying root causes will remain difficult and will require further analysis by ML engineers. This itself can be seen as a case-by-case explainability analysis, but one performed in a less controlled and streamlined manner. It can also drive up costs.

- Product management view: Management will push to resolve interruptions in the production process as quickly and safely as possible, yet operating procedures may require deeper analysis, involving further parties and hindering the actual production process and the product release itself.

- Audit and regulatory view: Audit will require the process to be in full control with regard to safety and quality expectations. This must be demonstrated by the corporation that utilizes the AI solution, which is typically a QA team’s duty. However, within a black-box environment, analysis and decision-making processes might take longer and so the responsiveness to critical situations might be under question.

With existing processes still in the lead with respect to GxP compliance, the consequences mentioned here contribute to why some AI solutions remain in the pilot phase and their quantitatively shown superiority remains unharvested. And even if such a system were applied in production, controls and double-checks might dilute the benefits from an economic point of view, on top of regulatory risks during audit.

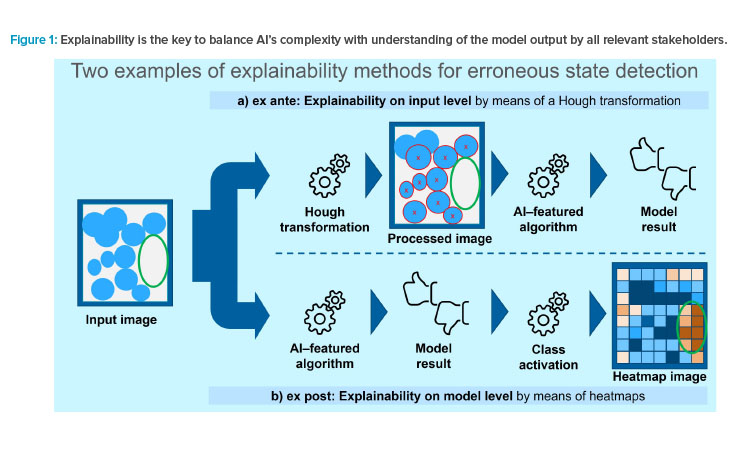

So how can we overcome this dilemma? Explainable AI aims to be an add-on to the actual model engine that is designed to show stakeholders how AI has come to its conclusion (see Figure 1). The black box will be opened to a level suitable for the audience, and along the way, the development process itself will benefit from these insights.

Continuing our introductory example on the identification of erroneous states, we present two xAI methods in Figure 2, representing ex ante explainability on the level of input data and ex post explainability on the level of the model.9 The input image can be a) processed by means of a feature extraction method (Hough Transformation, a robust method to identify shapes like circles and lines) and b) compared to heatmaps that illustrate the class activation of neural networks in classifying an erroneous state. These outputs could then guide the human operators in their decisions and could be used in a retrospective QA exercise. Furthermore, they can support communicating the functioning of the algorithm and how it is embedded in operational procedures in an audit situation.
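To make the feature extraction idea more tangible, the following is a minimal sketch of a Hough-style circle detection, written in plain Python. It is not the pipeline used in the cited study: the synthetic edge pixels, the assumption of a known radius, and the function names `synthetic_circle` and `hough_circle_center` are illustrative choices only. Each edge pixel votes for all centers that could have produced it, and the center with the most votes is reported, which is what makes the result easy to show to an operator.

```python
import math
from collections import Counter


def synthetic_circle(cx, cy, radius, n_points=60):
    """Return integer edge pixels on a circle (stand-in for an edge-detected image)."""
    return [
        (round(cx + radius * math.cos(2 * math.pi * i / n_points)),
         round(cy + radius * math.sin(2 * math.pi * i / n_points)))
        for i in range(n_points)
    ]


def hough_circle_center(edge_points, radius, angle_steps=360):
    """Vote for circle centers of a known radius; return the best center and its votes."""
    votes = Counter()
    for x, y in edge_points:
        # All centers at distance `radius` from this edge pixel are candidates.
        candidates = set()
        for k in range(angle_steps):
            theta = 2 * math.pi * k / angle_steps
            candidates.add((round(x - radius * math.cos(theta)),
                            round(y - radius * math.sin(theta))))
        # Each edge pixel votes at most once per candidate center.
        for candidate in candidates:
            votes[candidate] += 1
    center, count = votes.most_common(1)[0]
    return center, count


# Hypothetical example: a circular object centered at (20, 15) with radius 8.
edge_points = synthetic_circle(cx=20, cy=15, radius=8)
center, count = hough_circle_center(edge_points, radius=8)
print(center, count)
```

The vote count doubles as a simple confidence indication: a detected center supported by only a few edge pixels would deserve less trust than one supported by nearly all of them.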

Needs and Responsibilities for xAI across Corporate Functions

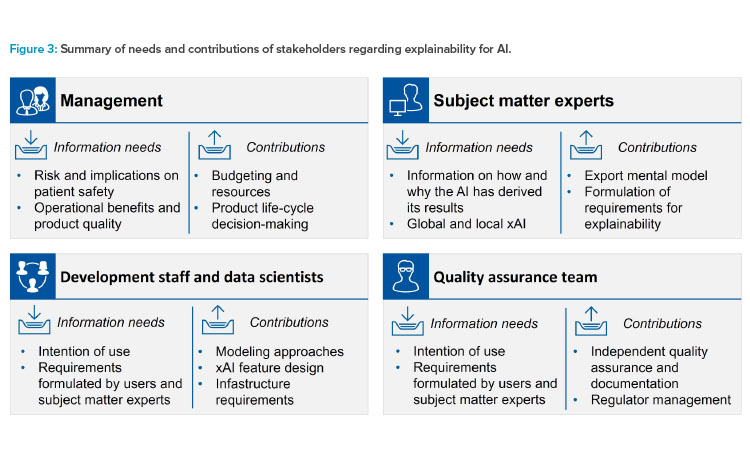

Driving a product through its life cycle in the GxP-regulated environment involves many stakeholders, each with their specific needs and responsibilities in unlocking the black-box nature of AI solutions (see Figure 3):

- Management needs to make educated decisions on whether the risks involved in productive operation are outmatched by the operative and patient-centric benefits, balancing the business and the product quality perspectives. To this end, the AI solution’s functioning and limits should be made transparent such that the actual decision to run the system in production can be made and further guidance for development can be issued. It is the project’s responsibility, involving both the development and the QA side, to provide this information at the level of detail suitable for management stakeholders.

- Subject matter experts (SMEs) represent the functional design of the solution. As such, they provide a “mental model” of the underlying functional mechanisms of the use case. They also curate the training and validation data that is used to train the model. Usually, they represent the user or even coincide with the consumers of the AI application’s services. Therefore, SMEs are one of the main addressees of the solution’s explainability features, for whom we differentiate two needs:

  - Explainability on the model level (global understanding): SMEs need information on the model’s overall behavior to match their mental model.

  - Explainability on the data-point level (local understanding): In the Human-AI-Team, explainability on the data-point level enables the user to understand how the solution has come to its conclusion. Depending on the intention of use, this may be directly necessary in a situation where the ultimate decision remains with the users. In such a situation, the users would add their expert knowledge to the AI’s result, leveraging both strengths of the Human-AI-Team: critical thinking and subject matter expertise as well as the capability to analyze large amounts of data. Furthermore, in automatic operating use cases, explainability on the level of a single data point is important to analyze sample or incident cases to understand potential areas of improvement.

  It is the SME’s responsibility to formulate clear expectations on the explainability capabilities as part of the intention of use and model design.

- Development staff and data scientists must fulfill the explainability requirements by suitable choices of technology and presentation that support the needs of the xAI’s audience. At the same time, they may also gain a better understanding of the model to iterate to an optimal model choice. Decisions must be made on various levels:

  - Solution’s modeling approach: When selecting AI modeling strategies, some models may be more open to explainability than others, typically at the cost of predictive power. For example, regression- and tree-based models are usually much easier to understand than complex, deep-layered neural network approaches. These decisions should carefully reflect the needs in order to find an optimal compromise among the various AI quality dimensions.7

  - Explainability feature design: To fulfill the specific needs of the xAI’s audience, explainability features must be integrated into the solution so that the target audience can build on this presentation. The challenges in this regard range from suitable algorithmic strategies and potential performance optimization to suitable representation of results.

  - Infrastructure and computation power: xAI is generally resource-intensive and has implications for the infrastructure required to support productive operation.

  In performing their task, the development team should keep an attitude of critical thinking: As more knowledge is accumulated during development, explainability requirements may shift, offering even better approaches. Hence, continuous exchange with SMEs should ensure both that actual needs are met and that learnings are quickly fed into the design process.

- QA team: The QA team is the other main addressee for explainability, as it must evaluate whether the solution is fit for production as per requirements and quality expectations. This involves careful planning to measure the effectiveness of xAI. Typical approaches involve structured feedback on the analysis of example cases by SMEs, while the QA team must take care to design the exercise so that a sufficient range of cases, both from usual operation and edge cases, is covered. In addition, the QA team must summarize the quality indications for management’s decision-making processes. Finally, the QA function is in charge of demonstrating the control framework to auditors, where xAI will support understanding and trust.
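The distinction between global and local understanding described above can be illustrated with a linear model, one of the model families that is comparatively open to explanation. The sketch below is a hypothetical example: the feature names, weights, and the helper functions `global_explanation` and `local_explanation` are assumptions for illustration and are not taken from any specific solution.

```python
def global_explanation(weights):
    """Model-level view: rank features by the magnitude of their weight.

    Assumes the features are on comparable scales (e.g., standardized).
    """
    return sorted(weights, key=lambda name: abs(weights[name]), reverse=True)


def local_explanation(weights, bias, sample):
    """Data-point-level view: contribution weight * value of each feature to one score."""
    contributions = {name: weights[name] * sample[name] for name in weights}
    score = bias + sum(contributions.values())
    return score, contributions


# Hypothetical linear model for an "erroneous state" score.
weights = {"temperature": 0.5, "pressure": -2.0, "flow_rate": 0.25}
bias = 1.0

# One hypothetical observation presented to the operator.
sample = {"temperature": 4.0, "pressure": 0.5, "flow_rate": 8.0}

ranking = global_explanation(weights)
score, contributions = local_explanation(weights, bias, sample)
print(ranking)
print(score, contributions)
```

The ranking addresses the SME’s question of whether the model’s overall behavior matches their mental model, while the per-feature contributions address the operator’s question of why this particular case received its score. For deep neural networks, comparable views generally require dedicated attribution methods and more computation.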

The Road to Explainable AI along the Product Life Cycle

The complex needs and responsibilities regarding explainability of an AI solution need to be structured in a framework to ensure that all required steps are performed along the development process. In the following overview, we propose a roadmap to orchestrate the stakeholders’ activities, supporting the effective use of AI in GxP-relevant processes (see Table 1).

| Business Function/Development Step | Management | Subject Matter Experts | Development Staff and Data Scientists | Quality Assurance Team |
|---|---|---|---|---|
| Intention of use design | High-level guidance on the solution’s goal | Learning target specification | Suggestions from technologically | Insights from similar projects |
| Risk assessment | Guidance on acceptable risks | Explainability feature-driven risks: misguidance, target group adequateness, lacking level of detail, performance | Data feasibility, quality, and robustness | Support in deriving quality |
| Model and explainability feature design | | Clarification | Model and explainability means | |
| Model and explainability feature implementation | | Feedback on usability | AI solution implementation and | QA planning (strategy and documentation definition) |
| Initial QA assessment and quality gate | | Expert assessment | | QA execution (testing, documentation, and reporting) |
| Productive operation and QA periodic review | Life cycle decision-making | Feedback on solution quality | Development planning | QA periodic review |

Intention of Use Design

Explainability must be considered from initiation of the project. The guiding question is: Who shall act in which situation based on the results of the AI solution? In answering this question, the basis for the following steps regarding explainability design is set:

- Who (the addressee): Identifying the addressee, i.e., the user or stakeholder to whom the AI’s results should be explained. This may range from operators controlling the actual production process to subject matter experts and QA for validation purposes. It is crucial to understand the background and knowledge of the respective audience to provide the right level of detail for performing their tasks when interacting with the AI.

- Act (the action to be performed): Leveraging the AI maturity model,6 the target operating mode for the AI solution may range from full oversight to exception handling only. Understanding what the addressee must do is paramount to designing the optimal means of providing the required information; also, non-functional restrictions such as processing times must be considered.

- In which situation (the circumstances in which the explainability features must achieve their effect): Circumstances may range from an office situation to the actual production environment with its impacts on space, sight, heat, etc. A good understanding of the situation in which the addressee uses xAI is required to design appropriate technical means.

- Based on the results of the AI solution: This part of the question reflects the output of the AI. The form and delivery of an explanation depends highly on the input and output structure.

In this early stage, all parties can contribute to the target operating model, either from the functional side (formulating expectations and requirements) or from the technological side (showcasing possibilities that might be unthinkable from an SME’s point of view).

Risk Assessment

GxP is about ensuring an acceptable balance between risks to patient safety and the benefits to patients. In that sense, explainability is one means to bridge the gap between what can be drawn from the data and what needs to be assessed by human intelligence. However, it is a mistake to generally consider explainability as a risk mitigation strategy, as xAI carries risks of its own:

- Misguidance and target group inadequateness: Even though explainability is designed to support the user in drawing the right conclusions from the AI solution’s output, xAI may suffer from the solution’s limitations and inadequate quality of the data used. Furthermore, the output may be misinterpreted by the audience.

- Mismatch between the data used for the model result and the data used for explainability features: It is crucial that the same data and the same state of the model are used to determine the actual model result and its explainability output presented to users. This could be ensured by recording a checksum or a cryptographic proof of the data used, as well as by strict tracing of the model versions.

- Lacking level of detail: Explainability cannot reveal the full mechanics of the model, which would be as complex as the model itself. Therefore, a decision on the level of abstraction must be made that determines the provided information. Whether this level of detail is sufficient should be carefully evaluated. Also, alternative means to perform the operators’ action should be provided for unforeseen cases.

- Performance: The xAI may be too slow to be operational in the context of the system, as additional functionality renders a higher burden on the infrastructure in which the solution is running. This aspect should be carefully evaluated in infrastructure planning.
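The checksum idea mentioned for the data and model mismatch risk can be sketched as follows. This is a minimal illustration using Python’s standard `hashlib` and `json` modules; the record layout and the function names `fingerprint` and `explanation_matches_result` are assumptions made for this example, not a prescribed design.

```python
import hashlib
import json


def fingerprint(model_version, input_data):
    """SHA-256 over a canonical JSON form of the model version and the input data."""
    payload = json.dumps(
        {"model_version": model_version, "input": input_data},
        sort_keys=True,              # stable key order, so equal data gives equal hashes
        separators=(",", ":"),
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def explanation_matches_result(result_record, explanation_record):
    """True only if both records were produced from the same data and model state."""
    return result_record["fingerprint"] == explanation_record["fingerprint"]


# Hypothetical records written by the model engine and by the xAI module.
input_data = {"sensor_id": "col-07", "readings": [12, 15, 11]}
result_record = {"outcome": "erroneous", "fingerprint": fingerprint("1.4.2", input_data)}
consistent = {"heatmap_id": "h-001", "fingerprint": fingerprint("1.4.2", input_data)}
stale = {"heatmap_id": "h-002", "fingerprint": fingerprint("1.4.3", input_data)}

print(explanation_matches_result(result_record, consistent))
print(explanation_matches_result(result_record, stale))
```

An explanation whose fingerprint does not match could be withheld from the user and logged for follow-up. In practice, floating-point inputs and binary data such as images would need an agreed canonical serialization before hashing.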

By means of a risk-based approach, management must decide which risks are deemed bearable compared to the benefits to be expected from the use of AI, and where further investment is needed to reduce risks to an acceptable level. This should be codified in quality expectations that the AI solution, including its explainability features, has to fulfill to be considered safe for productive use.

Model and Explainability Feature Design

Once the intention of use, the addressees, and the acceptable risks have been identified, the solution should be designed in a way that it is able to fulfill its specific quality expectations. The impact of the preceding steps on the modeling decision should not be underestimated: When users are confronted with the solution’s results in every single case and build their decisions on the AI output, a different modeling strategy may be pursued than when the solution operates in a mode in which only exceptions are handled by operators.

However, it is important to critically reflect the decisions made so far, as more understanding of the use case will be gained once the first models are evaluated. Prototyping strategies for early feedback and alignment reduce the acceptance risks further down the development process stream. In this process, documentation regarding the decisions made is important to justify the model and explainability mechanism selection in an audit context.

Model and Explainability Feature Implementation

xAI must adhere to the same software engineering standards as the AI solution itself and the more classical computerized systems with which the solution is integrated. This means that code quality standards, automated testing strategies, and qualification must be maintained, based on the intention of use, the risk assessment and requirements that have been identified in the first two steps.

Training, alignment, and refinement should be planned to reach the quality expectations, especially since the addressees of explainability features may be inexperienced with those mechanisms.

As the target solution including explainability features becomes clear, the QA team should plan on how to validate the effectiveness of these mechanics during validation planning.

Initial QA Assessment and Quality Gate

As for other aspects of the solution, the goal of QA is to generate evidence on whether quality expectations are met. In our earlier article,7 we suggested the concept of five AI quality dimensions that provide a blueprint to structure these expectations in an AI context. Here, explainability is one crucial part of the “Use Test” dimension, i.e., the acceptance of the solution by its users.

The following aspects are important to generate sufficient evidence that quality expectations are met:

- As a quality gate to productive operation, the QA assessment must cover an appropriate range of use cases and collect users’ or SMEs’ feedback on implemented explainability features.

- It is important to cover a suitable range of input and output scenarios: For instance, in binary classifications, explainability should work both for positive as well as negative cases along suitable stratifications of the input data space.

- The range of users is important to consider, from new joiners to experienced staff. This variety helps in aggregating insights on the overall effectiveness of the xAI.
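The coverage aspects listed above can be checked mechanically when an xAI test plan is drafted. The following sketch assumes a binary classification use case and a hypothetical case format with the fields `stratum` and `label`; the function name `coverage_gaps` and the strata are illustrative only.

```python
from collections import Counter


def coverage_gaps(test_cases, strata, labels, min_per_cell=1):
    """List every (stratum, label) combination with fewer than min_per_cell test cases."""
    counts = Counter((case["stratum"], case["label"]) for case in test_cases)
    return [
        (stratum, label)
        for stratum in strata
        for label in labels
        if counts[(stratum, label)] < min_per_cell
    ]


# Hypothetical plan: operating phases as strata, erroneous state yes/no as label.
strata = ["startup", "steady_state"]
labels = [True, False]
test_cases = [
    {"case_id": 1, "stratum": "startup", "label": True},
    {"case_id": 2, "stratum": "startup", "label": False},
    {"case_id": 3, "stratum": "steady_state", "label": True},
]

gaps = coverage_gaps(test_cases, strata, labels)
print(gaps)
```

Here the plan lacks a negative case in steady-state operation, so the explainability feature would go untested for that combination. The same check could be extended with the experience level of the assessing user as a further dimension.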

The QA team should draw a holistic conclusion based on the generated evidence and prepare the product life-cycle decision-making by higher-level management. To this end, the team should report the weaknesses identified in the Human-AI-Team, indicating jointly with the SMEs their criticality and the potential for risks to materialize, along with suggestions for improving the solution and its explainability features, e.g., regarding presentation of results, extensions of existing functionality, or changes in limitations applied to productive operation.

As for other validation exercises, documentation of the QA exercise requires a traceable path starting with the requirements for intention of use, along the risk assessment to the functional design decisions and the tested evidence, including the data used for tests and validation. For the specific aspects of xAI, we deem most important the users’ assertions on how far the xAI supported them in matching their mental model with the AI’s results.

Productive Operation and Ongoing QA

During productive operation, data should be collected that allows the tracing of the AI solutions and its xAI:

- Input and output data: The version of the model, the input data known at that point in time, and the model results as well as the presentation to the user should be collected in a way that allows the situation to be reproduced for an ex-post investigation.

- If suitable in the use case, annotations by users to further qualify and show their interaction with the AI results should be kept. This should include structured input, free-text input, and images.

- Incidents in the context of explainability can be defined as cases in which the solution fails to provide suitable information to the user on how it reached its conclusion. In a GxP environment, a formal deviation management must be defined; i.e., it must be predefined under which circumstances a deviation record will be triggered. Furthermore, to allow for a targeted improvement of the AI and xAI tandem, the following cases should be considered:

  - The AI solution’s result is acceptable, although the xAI fails to communicate this result: This is an incident attributable to the xAI and offers opportunities to improve the presentation of the model’s result. Such an incident may stem from an insufficient level or choice of detail or from actual errors in the presentation. In particular, the former can be best understood in collaboration with users and their refined view on expectations of xAI presentation.

  - The AI solution’s result is not acceptable, although the explainability feature provides suitable insights on why the AI has come to its conclusion: This can be seen as an incident attributable to the AI model and offers opportunities to improve the predictive power of the solution. A follow-up would be to investigate the model mechanics in more depth given the input.

  - The AI solution’s result is not acceptable, and the explainability features fail to communicate the result to the user: This incident should be attributed both to the AI model and to the xAI and forms the most critical case from an operational point of view, as it hinders trust in the AI. Such cases should be thoroughly investigated and matched against the risk assessment’s acceptance of such failures.
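The three incident cases above amount to a small decision table, which can be encoded so that incident records are attributed consistently. The sketch below is an assumption-laden illustration: the enum `Attribution` and the function `attribute_incident` are hypothetical names, and the two boolean judgments would in practice come from the user’s or SME’s assessment.

```python
from enum import Enum


class Attribution(Enum):
    NONE = "no incident"
    XAI = "xAI"
    AI_MODEL = "AI model"
    BOTH = "AI model and xAI"


def attribute_incident(result_acceptable: bool, explanation_suitable: bool) -> Attribution:
    """Map the two assessments of a case to the component the incident is attributed to."""
    if result_acceptable and explanation_suitable:
        return Attribution.NONE
    if result_acceptable:
        # Result fine, explanation failed: improve the presentation.
        return Attribution.XAI
    if explanation_suitable:
        # Explanation fine, result wrong: investigate the model mechanics.
        return Attribution.AI_MODEL
    # Both failed: the most critical case, to be matched against the risk assessment.
    return Attribution.BOTH


# Hypothetical assessments collected during productive operation.
assessments = [(True, True), (True, False), (False, True), (False, False)]
attributions = [attribute_incident(r, e) for r, e in assessments]
print([a.value for a in attributions])
```

Counting incidents per attribution over a review period gives a simple indication of whether improvement effort should go into the model, the explainability features, or both.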

In the statistical setting of applying AI, awareness of its limitations and critical thinking remain necessary so that cognitive intelligence can augment artificial intelligence where needed: Users who keep the AI’s limitations in mind and are provided with appropriate means to take over control can more easily trust the AI. Only based on this trust can constructive collaboration emerge.

This data will provide valuable insights on how to direct the solution through its life cycle, either from a product development point of view or as per corrective and preventive actions (CAPA) management. With regard to explainability, user feedback is the most important source to improve on the interaction with the AI. This should be classified by severity and qualified by observations or images to allow for an in-depth investigation.

Guidance to Measure xAI Effectiveness

The goal of xAI is to communicate the model’s results to the SMEs. The evaluation of its effectiveness should cover the following aspects:10

- Acceptance of the results: In many use cases, users are still in control of the ultimate decision, or can judge cases in an ex-post manner on a representative sample for QA purposes. Here, acceptance of the results can be measured by means of “overrides,” i.e., cases in which the user opted for a different outcome than the algorithm. This measure can be collected with the solution provided both with and without xAI; comparing the two results indicates the effectiveness of the explainability feature.

- Efficiency: In many use cases, the xAI should support the joint decision-making of the Human-AI-Team. Hence, a performance benchmark can be drawn, if feasible, by comparing average times to decision (each with a representative set of cases): by classical means without an AI-featured solution; with the support of an AI-featured solution, but without explainability features; and with the support of an AI-featured solution and its explainability features in addition. We should expect that operating times decrease with the level of technology applied. In a sense, the time difference between operation with and without explainability features is the benefit of investing in an additional layer of technology to explain the AI’s results. Surveys may capture qualitative input on the capabilities and limitations of the explainability features (e.g., general presentation, level of detail, overall trust in the solution).
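Both measures above are simple to compute once the decisions and timings are logged. The following sketch uses invented example data; the outcome labels, timings in seconds, and the function name `override_rate` are assumptions for illustration and do not represent measured results.

```python
from statistics import mean


def override_rate(decisions):
    """Share of cases in which the user chose a different outcome than the algorithm."""
    overrides = sum(1 for ai_outcome, user_outcome in decisions if ai_outcome != user_outcome)
    return overrides / len(decisions)


# Hypothetical (AI outcome, user outcome) pairs for the same set of cases.
without_xai = [("ok", "ok"), ("error", "ok"), ("error", "ok"), ("ok", "ok")]
with_xai = [("ok", "ok"), ("error", "error"), ("error", "ok"), ("ok", "ok")]

rate_without = override_rate(without_xai)
rate_with = override_rate(with_xai)

# Hypothetical times to decision in seconds for the three operating setups.
times = {
    "classical": [120, 100, 140],
    "ai_only": [90, 70, 80],
    "ai_with_xai": [60, 50, 70],
}
mean_times = {setup: mean(values) for setup, values in times.items()}
xai_time_benefit = mean_times["ai_only"] - mean_times["ai_with_xai"]

print(rate_without, rate_with)
print(mean_times, xai_time_benefit)
```

A lower override rate with xAI should be read together with the correctness of the final decisions, since it could also reflect users trusting erroneous indications. With realistic sample sizes, the differences should additionally be checked for statistical significance before conclusions are drawn.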

Conclusion

The Human-AI-Team5 can only work effectively by means of clear communication and understanding. Staff can draw the right conclusions, gain trust in the results, and take over control in case of statistical failures. The key to understanding AI is another layer of functional instruments: xAI. However, designing xAI will require compromises based on the intention of use and the risk assessment, considering the target audience of various business functions.

This takes AI one step further: Like humans providing an expert-based prediction along a narrative, xAI does so based on the input data and by sharing insights into its model mechanics. With our roadmap to explainable AI in GxP-regulated areas, we show how to operationalize the analysis and decision-making to arrive at a suitable setup tailored to the intention of use, with quality assured and therefore fit for production. Hence, the design process of xAI should begin at the very start of AI projects.

Continuous measurement of the effectiveness of the explainability features, as for every crucial gear in a GxP-governed process, ensures long-term usability and trust. Technological approaches have provided the capabilities to unbox the black-box nature of AI solutions. The next step is to integrate these instruments into the development and QA processes as another building block to grasp the potential of AI in life sciences.

Acknowledgements

The authors thank the ISPE GAMP D/A/CH Special Interest Group on AI Validation for their assistance in the preparation of this article, and Ingo Baumann, Rolf Blumenthal, Nico Erdmann, Ferdinand Graf, Bastian Kronenbitter, Albert Lochbronner, and Veit Mengling for their review.