Data Integrity in the Trenches: A Look into the Quality Control Lab

Pharmaceutical Engineering magazine’s March–April 2016 Special Report [1] highlighted the increasing importance of data integrity for companies throughout the global GMP-regulated industry. This importance is especially evident during health authority inspections [2, 3, 4]. Pharmaceutical, biotech, and API manufacturers, as well as contract manufacturing organizations (CMOs) and contract laboratories, have been “in the trenches,” making improvements to strengthen data integrity and data management over the data life cycle.

- [1] International Society for Pharmaceutical Engineering. “Special Report: Data Integrity.” Pharmaceutical Engineering 36, no. 2 (March–April 2016): 39–67.

- [2] Unger, Barbara. “An Analysis of 2017 FDA Warning Letters on Data Integrity.” Pharmaceutical Online, 18 May 2018. https://www.pharmaceuticalonline.com/doc/an-analysis-of-fda-warning-letters-on-data-integrity-0003

- [3] Unger Consulting. “CY2017 FDA Data Integrity / Data Governance Warning Letters.” 12 March 2018.

- [4] Toscano, George. “Data Integrity: A Closer Look.” NSF International White Paper. 2018. http://www.nsf.org/newsroom_pdf/pb_data_integrity_closer_look.pdf

Much of the focus on data integrity (DI) by health authorities during GMP inspections has been related to quality control laboratory operations. In this article we will look at both the regulatory context and the industry response. Additionally, since the quality control laboratory analyst is a key GMP role that touches data integrity, we will take a deeper look at the challenges of sustaining data integrity in that environment. We believe that a holistic approach to data governance that includes people, processes, and technology will provide the road map to sustainable data integrity.

How Did We Get Here?

Data integrity is not a new requirement. It has been and continues to be an inherent aspect of global GMP regulations. An analysis of data integrity deficiencies in FDA Warning Letters issued from 2008 to 2017 revealed an increase in both the number and the global scope of these findings, as well as consistency in their content [2, 3]. When the period was expanded to 2005–2017, the top five types of deficiencies related to basic GMP record requirements were [4]:

- Missing or incomplete records

- Deficient system access controls (e.g., shared logins)

- Mishandled chromatography samples and data, including reprocessing, reintegration, and manual integration without proper controls

- Deleted or destroyed original records

- Audit trail deficiencies

Guidance

In response to these trends, global health authorities and professional organizations began to publish data integrity guidance documents in 2015 [5–16]. These documents emphasize key principles related to robust data integrity, e.g., data life-cycle management, data governance, and “ALCOA+”* principles.

It is important to understand that preventing deliberate falsification is only one aspect of data integrity risk management—albeit a significant one. Human error and other challenges, which we will discuss later in this article, are also contributors.

Terminology

Inconsistent terminology is another challenge. When comparing global regulatory guidance about data integrity, it becomes clear that terms are not always used consistently. Industry organizations have helped clarify expectations and “how to,” but interpretations of the term “data integrity” still vary. An analysis comparing “data integrity” definitions in guidance documents with those published in international standards for electronic records found that most guidance documents use the term primarily to mean “data reliability” or “data quality” [17]. The recently published GAMP® Guide on Records and Data Integrity provides a framework for consistent terminology and key concept definitions [16].

* ALCOA is a framework of data standards designed to ensure integrity: attributable, legible, contemporaneous, original, and accurate. ALCOA+ also includes complete, consistent, enduring, and available.

- [5] UK Medicines and Healthcare Products Regulatory Agency. “‘GXP’ Data Integrity Guidance and Definitions.” Revision 1. March 2018. https://assets.publishing.service.gov.uk/government/uploads/system/uploads/attachment_data/file/687246/MHRA_GxP_data_integrity_guide_March_edited_Final.pdf

- [6] US Food and Drug Administration. “Data Integrity and Compliance with cGMP.” Guidance for Industry. Draft Guidance. April 2016. https://www.fda.gov/ucm/groups/fdagov-public/@fdagov-drugs-gen/documents/document/ucm495891.pdf

- [7] World Health Organization. Annex 5: Guidance on Good Data and Record Management Practices. WHO Technical Report Series, No. 996, 2016. http://www.who.int/medicines/publications/pharmprep/WHO_TRS_996_annex05.pdf

- [8] PIC/S. “Good Practices for Data Management and Integrity in Regulated GMP/GDP Environments.” Draft 3, 30 November 2018. https://picscheme.org/en/news?itemid=33

- [9] European Medicines Agency. Guidance on Good Manufacturing Practice and Good Distribution Practice: Questions and Answers. “Data Integrity (August 2016).” https://www.ema.europa.eu/human-regulatory/research-development/compliance/good-manufacturing-practice/guidance-good-manufacturing-practice-good-distribution-practice-questions-answers#-data-integrity-(new-august-2016)-section

- [10] Parenteral Drug Association. Points to Consider. “Elements of a Code of Conduct for Data Integrity in the Pharmaceutical Industry.” https://www.pda.org/scientific-and-regulatory-affairs/regulatory-resources/code-of-conduct/elements-of-a-code-of-conduct-for-data-integrity-in-the-pharmaceutical-industry

- [11] China State Food and Drug Administration. “Drug Data Management Standard.” Translated from the Chinese by the China Working Group of Rx-360. https://ungerconsulting.net/wp-content/uploads/2016/10/SFDA-Drug-Data-Management-Standard.pdf

- [12] Schmitt, S., ed. Assuring Data Integrity for Life Sciences. River Grove, IL: Parenteral Drug Association, DHI Publishing, LLC, 2017.

- [13] Australian Therapeutic Goods Administration. “Data Management and Data Integrity (DMDI).” April 2017. https://www.tga.gov.au/data-management-and-data-integrity-dmdi

- [14] International Society for Pharmaceutical Engineering. GAMP® Guide: Records and Data Integrity. March 2017.

- [15] Parenteral Drug Association. Technical Report Number 80. “Data Integrity Management System for Pharmaceutical Laboratories.” 2018.

- [16] International Society for Pharmaceutical Engineering. GAMP® Good Practice Guide: Data Integrity – Key Concepts. October 2018.

- [17] López, Orlando. “Comparison of Health Authorities Data Integrity Expectations.” Presented at the 4th Annual Data Integrity Validation Conference, 15–16 August 2018, Boston.

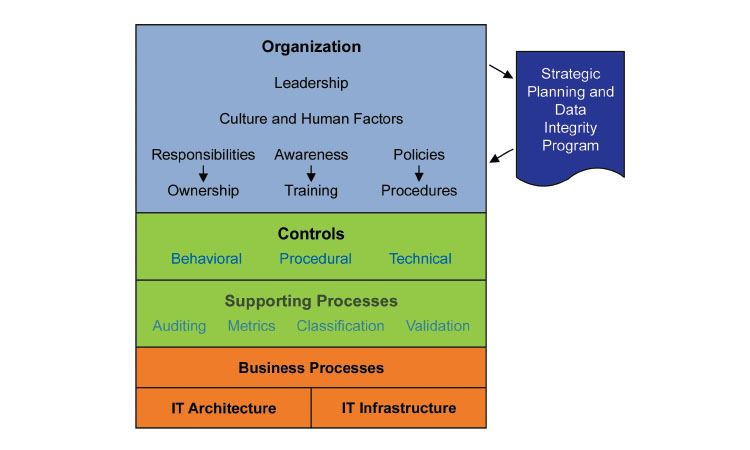

Leadership

Health authorities agree on management’s responsibility to assure that the pharmaceutical quality system (PQS) governing the GMP environment provides effective data integrity risk management. Figure 1 illustrates the key elements for effective GMP data governance [14]. This holistic approach includes the organizational components of leadership and culture; controls that encompass people, process, and technology; and supporting processes such as metrics, IT architecture, and infrastructure. The purpose of such an approach is to assure that product manufacturing and testing can be reliably reconstructed from the records. Achieving reliable ALCOA+ data attributes can be considered an output of a robust data governance system such as this.

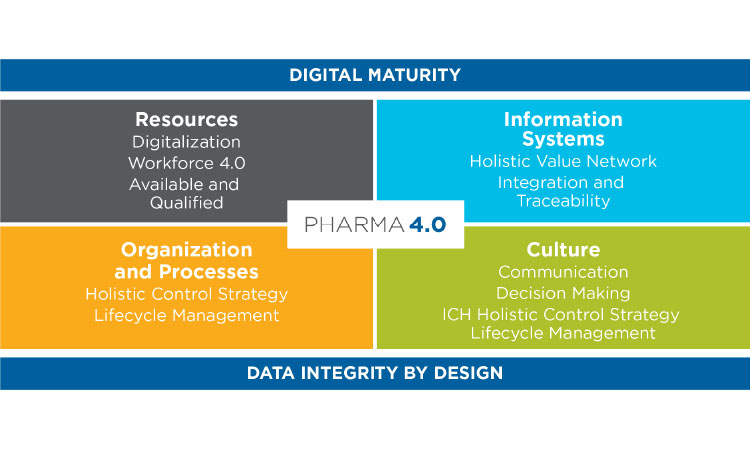

Pharma 4.0

The transition currently underway to adopt Pharma 4.0 principles and practices emphasizes digitalization of pharmaceutical manufacturing and supply chain operations in alignment with the PQS [18]. Figure 2 illustrates the four quadrants of the Pharma 4.0 operating model and its key enablers: digital maturity and data integrity by design. Although an in-depth examination of these enablers is beyond the scope of this article, we will explore how the transition to Pharma 4.0 may affect the role of the quality control laboratory analyst and the quality control laboratory of the future.

Data Integrity Programs

In response to the increased regulatory focus on data integrity, many companies have established data integrity programs. While preparing this article in late Q3 2018, we interviewed 11 global pharmaceutical leaders responsible for such programs. Their companies included top-20 pharmaceutical companies, one generic company, and one API supplier. We wanted to gain a broader view of the challenges and lessons learned from these efforts, as well as the leaders’ perspectives on the 3-to-5-year horizon for these data integrity programs.

Several themes emerged regarding data integrity program scope:

- Most companies focused initially on quality control laboratory systems and operations, then began to extend their efforts to manufacturing operations, third-party CMOs, vendors, and other areas. In most cases, the corrective and preventive actions (CAPAs) generated for quality control laboratories were still in progress.

- Most programs emphasized technology enhancements to connect and integrate laboratory equipment and systems to achieve full compliance and to minimize human error.

- Although increasing data integrity training was a common aspect of program scope, fewer companies reported a focus on building cultural excellence as part of sustaining data integrity enhancements.

- Data integrity maturity among the companies interviewed ranged from programs struggling with limited scope to a fully mature remediation program that has since transitioned to permanent roles and practices embedded in the PQS.

- Most of the companies are in the trenches at different stages of detecting and remediating gaps. They expect this to continue into 2019 and beyond.

Common challenges included:

- Laboratory informatics products and tools meet system compliance requirements (e.g., 21 CFR Part 11, EU Annex 11) but fall short of meeting data integrity operational needs. Needed capabilities include 1) standard reports and queries for effective and efficient audit trail reviews and 2) database (master data) configurations that replace spreadsheet calculations and manual transcription of results.

- Balancing subject matter expert resources among daily business, data integrity enhancements, and other urgent priorities. One company, for example, shifted its focus to cybersecurity, which it perceived as a greater risk.

- Capital and labor costs for new equipment and systems and long timelines for implementation and integration.

We also noted that some companies joined with others to share learnings in informal forums. Key topics included audit trail review practices and health authority expectations during inspections.

The challenges described by the companies we interviewed indicated that most were in the trenches—i.e., focused on data integrity program CAPAs to strengthen systems and processes. Most have not yet envisioned sustaining data integrity in a future quality control laboratory beyond the landscape of integrated systems.

Because the quality control laboratory and the analyst role remain primary focus areas for regulatory inspections, data integrity observations, and remediation efforts, the next sections focus on the quality control laboratory analyst and the data integrity challenges and risks analysts face every day.

Quality Control Laboratory Analyst

While quality control laboratories in the regulated industries generally operate reasonably smoothly, they can still face data integrity issues. For purposes of illustration, we have created a fictional analyst named Fabian, whose daily quality control laboratory workflow is detailed, tedious, and sometimes dependent on factors that are hard to control (missing samples or reagents, equipment failures, and outdated analytical test methods). Although we focus on a day in the life of this analyst, the information presented is relevant to other roles and functions as well, including R&D laboratory scientists, laboratory supervisors, and manufacturing personnel. The pressures described in this section can develop in any laboratory over time.

Figure 3 shows the long list of complex tasks that are typically part of Fabian’s day. He must complete a large volume of paper and/or electronic laboratory documentation for each sample tested. As in most quality control laboratories, Fabian’s productivity (samples/tests completed per day) is measured and monitored, and his work is reviewed for accuracy and correctness. Documentation gaps and laboratory errors result in time-consuming investigations and CAPAs. Controls added over the past several years to monitor, detect, and lower data integrity risks have increased the workload of Fabian and his colleagues. Despite this, at the end of the day, Fabian sometimes feels that his completed work is not fully trusted by his peers, company or organization leaders, auditors, or regulators.

Pressures and Challenges

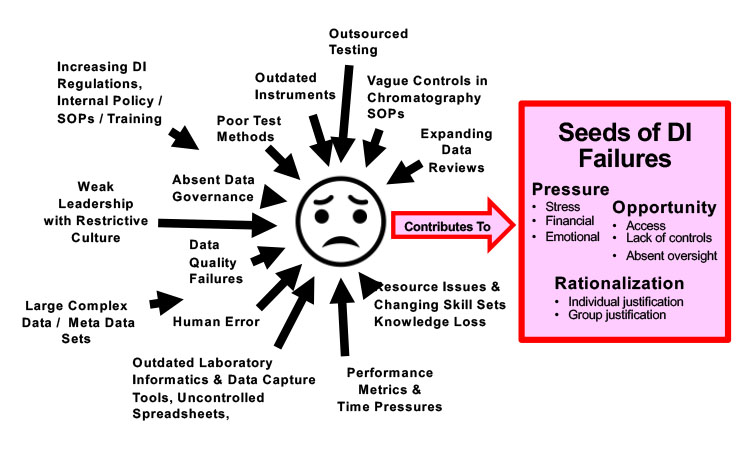

Being a quality control laboratory analyst is tough and tedious! Pressures come in many forms. Some are obvious; others are subtler but equally significant. These pressures can increase the risk to data integrity by planting seeds for data integrity failures and fraud, especially when combined with opportunity (to adjust or modify data) and rationalization (justifying fraudulent actions). Figure 4 illustrates a few of the pressures that today’s GMP laboratory analysts (and laboratory leaders) often encounter.

Poor and Outdated Test Methods

Quality control laboratories sometimes use outdated test methods (e.g., analytical, microbiological, environmental monitoring, sterility, container closure, immunology), typically for mature products. The cost and time required to update these methods, including associated change controls and multi-country registration filings, can put the brakes on needed changes in some laboratories [19]. Mergers, acquisitions, and outsourced manufacturing and testing can create virtual and fragmented supply models and take the focus off updating old analytical methods. Another problem is test methods whose performance relies on undocumented “tribal knowledge.” Analyst turnover and the use of temporary analysts in response to mergers, acquisitions, and cost reductions can accelerate the loss of this tribal knowledge and affect test method execution. All of these situations can lead to data integrity issues.

Quality control laboratory analysts can find themselves in no-win situations when they are required to use outdated test methods that generate errors and/or data quality issues. These pressures can lead to atypical behaviors, such as channeling testing to just one or two analysts, which increases the risk of data fraud and data integrity breaches.

Figure 3: Quality control laboratory analyst daily tasks

1. Receive sample from production

2. Record sample chain of custody in paper logbook and spreadsheet/system

3. Transfer and register samples in LIMS

4. Prepare sample labels and safety info

5. Transfer samples to testing laboratories

6. Review emails and plan workday

7. Print test documentation/worksheets

8. Collect reference standards; record metadata

9. Collect glassware and consumables

10. Collect/prepare reagents/solutions; verify expiry dates; record metadata

11. Perform daily balance check

12. Prepare equipment and instruments, verify calibration; record metadata

13. Weigh samples and standards

14. Prepare samples (dilutions/filtration); record metadata

15. Run system suitability/calibrations; record metadata

16. Run tests/instrument (overnight); record metadata

17. Attend daily shift huddle

18. Discuss change in sample priority with lab planner; switch to an urgent sample or different methods?

19. Capture and record test results and metadata on paper or system

20. Save all raw data files

21. Perform calculations and capture on paper or use spreadsheet

22. Update test status on wipe board

23. Review data for errors

24. Attend training session due to previous laboratory errors (CAPA)

25. Manually enter data to LIMS/SAP

26. Manually enter data into spreadsheets for trending

27. Review data compared to specs

28. Manually trend data

29. Update sample tracking spreadsheet

30. Review peer’s test results and calculations for errors

31. Request OOS/LI if errors are found

32. Update usage logbooks

33. Save all sample, standard, and reagent preparations pending data review

34. Review documentation and audit trails

35. Wait for peer data verification

36. Correct errors found during data review (paper and e-Records)

37. Wait for supervisor data review

38. Correct errors found during supervisor review (paper and e-Records)

39. Receive decision: data valid

40. Clean work area

41. Stage dirty glassware for cleaning

42. Stage sample for waste disposal

43. Update sample chain of custody

44. Respond to any investigations

45. Repeat testing/remeasurement

46. Wait for results of investigation

47. Close records; file all paper data packs and notebooks

- [19] Borman, Phil. “Opportunities to Include Analytical Method Lifecycle Concepts in ICH Q2, Q12, & Q14.” Presented at the ISPE 2018 Annual Meeting & Expo, 4–7 November 2018, Philadelphia. https://www.researchgate.net/publication/328838326_Opportunities_to_include_Analytical_Method_Lifecycle_concepts_in_ICHQ2_Q12_Q14

Weak Chromatography Procedures and Controls

Although many quality control laboratories rely on chromatography as a primary analytical technique, many standard operating procedures (SOPs) and training materials contain vague language that is open to interpretation. Examples include no controls on disabling audit trails or on the use of manual interventions or integrations; no requirement to perform steps in a defined order (system suitability, data acquisition, data analysis); no requirement to include all injections made during testing; and no naming conventions for samples or standards.
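SOP rules like these can also be checked automatically. The Python sketch below is a minimal illustration under stated assumptions, not a CDS feature or a procedure from PDA Technical Report No. 80: the naming pattern (`SST-`, `STD-`, `SMP-` prefixes), the step names, and the `Injection` record are hypothetical choices for the example. It flags names that break the convention, acquired injections missing from the reported results, and processing steps performed outside the system suitability, data acquisition, data analysis order.

```python
import re
from dataclasses import dataclass

# Hypothetical naming convention: <TYPE>-<ID>, e.g. "SST-01", "STD-A1", "SMP-1234".
NAME_PATTERN = re.compile(r"^(SST|STD|SMP)-[A-Z0-9]+$")

# Processing order taken from the text: system suitability, data acquisition, data analysis.
STEP_ORDER = {"system_suitability": 0, "data_acquisition": 1, "data_analysis": 2}


@dataclass
class Injection:
    sequence_no: int  # acquisition order assigned by the CDS (assumed field)
    name: str         # sample/standard name entered by the analyst
    reported: bool    # True if the injection appears in the reported result set


def check_run(injections: list[Injection], steps: list[str]) -> list[str]:
    """Return findings for one chromatography run; an empty list means nothing was flagged.

    `steps` lists processing steps in the order they were performed (e.g., read from
    the audit trail); each must be a key of STEP_ORDER.
    """
    findings = []
    for inj in sorted(injections, key=lambda i: i.sequence_no):
        if not NAME_PATTERN.match(inj.name):
            findings.append(f"injection {inj.sequence_no}: name '{inj.name}' breaks naming convention")
        if not inj.reported:
            findings.append(f"injection {inj.sequence_no}: '{inj.name}' omitted from reported results")
    # Any step performed before a step that should precede it is out of order.
    ranks = [STEP_ORDER[s] for s in steps]
    if ranks != sorted(ranks):
        findings.append(f"processing steps out of order: {steps}")
    return findings
```

For example, a run with an unreported injection named `trial` and a system suitability evaluation logged after data analysis returns three findings. Real inputs would come from a CDS export, whose format varies by vendor.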

Vague chromatography SOP controls can yield data integrity issues and place further pressures on the quality control laboratory analyst, a concern discussed in depth by Newton and McDowall: “Management is responsible for creating a robust chromatographic procedure, and foundational policies that accompany it. These must be incorporated into training that is received by every analyst to assure consistency in practice” [20].

Work Volume, Performance Metrics, and Time Pressures

While work volume for the quality control laboratory analyst typically fluctuates, both high- and low-volume workloads can be stressful. Analyst performance is typically measured in two ways: productivity (e.g., samples and/or tests per unit of time, roles completed per unit of time, batches tested per analyst, percentage of samples completed on time vs. demand) and quality, typically measured as right-first-time (RFT) testing. If not managed properly, such metrics can induce behaviors that increase data integrity risks and lead to underreporting of laboratory errors [21, 22].
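To show how these two metric families are commonly computed, the sketch below derives tests per hour and the RFT percentage for each analyst. The `CompletedTest` fields (hands-on hours and a first-time-right flag) are assumptions for this example; laboratories define RFT differently, for instance on whether a documentation correction counts as a failure.

```python
from dataclasses import dataclass


@dataclass
class CompletedTest:
    analyst: str
    hours: float            # hands-on time booked to the test (assumed field, must be > 0)
    first_time_right: bool  # True if no rework, correction, or investigation was needed


def analyst_metrics(records: list[CompletedTest]) -> dict[str, dict[str, float]]:
    """Per-analyst productivity (tests per hour) and right-first-time (RFT) percentage."""
    totals: dict[str, dict[str, float]] = {}
    for r in records:
        t = totals.setdefault(r.analyst, {"tests": 0, "hours": 0.0, "rft": 0})
        t["tests"] += 1
        t["hours"] += r.hours
        t["rft"] += int(r.first_time_right)
    return {
        analyst: {
            "tests_per_hour": t["tests"] / t["hours"],
            "rft_pct": 100 * t["rft"] / t["tests"],
        }
        for analyst, t in totals.items()
    }
```

An RFT figure is only as trustworthy as the error reporting behind it. If analysts feel pressure to keep the number high, it can rise through underreporting rather than through fewer errors.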

Human Error

Quality control laboratory analysts are human and occasionally make mistakes that can lead to laboratory errors and inaccurate test results. However, regulators make no distinction between inadvertent human error and intentional fraud, since both have the same effect on data integrity, product quality, and patient safety [21]. Fear of retraining and disciplinary consequences can put additional pressure on the laboratory analyst.

Large and Complex Data Sets

Laboratory data complexity has increased significantly over the past few decades, requiring more time and effort to collect, analyze, review, and report. Simple wet chemistry testing and single-point assays have given way to complex high-performance/ultra-performance liquid chromatography analysis, sometimes combined with gradient elution, diode array detectors, and mass spectrometry. Large-molecule/biological products require complex plate-based analysis, biochemical testing, and genomic testing.

The growth of CMOs and contract laboratories, combined with the diversification of laboratory informatics tools, has made it more complex to reconstruct the records for a batch of drug product. The digital laboratory record could include elements from multiple suppliers, manufacturing processes, CMOs, and contract and internal laboratories, each with a mixture of instruments, raw data, audit trails, and analyzed and reported results. The expanded size and increased complexity of laboratory raw and processed data records put additional burdens and pressures on the laboratory analyst.

Expanding Data Reviews

Until recently, most quality control SOPs contained few requirements to review laboratory record audit trails. As cGMP data integrity expectations have expanded over the past few years, so have the time, skills, and resources required for data review. “Data review” now includes test results, (multiple) audit trails, metadata, calculations, supporting static data (specifications), trends, unreported data, repeat testing, chromatography integration parameters, and more.

The quality control laboratory analyst, acting in a peer or dedicated reviewer role, performs the bulk of these reviews, which add new tasks to the laboratory workflow. The increase in laboratory analyst work related to expanding data integrity controls, while hard to quantify, is estimated at 10%–15%. Despite this, resources often have not expanded enough to meet the additional data review requirements, which adds more pressure on the quality control laboratory analyst.

Outdated Instruments and Informatics

Cost constraints may lead to chronic underfunding or deferred funding for needed instrument and software updates. Outdated instruments are sometimes tied to the outdated test methods discussed previously. Older instruments can fail because of poor service, lack of parts, or lack of the knowledge and skills required to operate and fix them. Outdated laboratory instruments with dedicated software typically lack provisions for 21 CFR Part 11 requirements (individual sign-on, electronic records, electronic signatures, and audit trails).

Laboratory informatics tools (software applications) age along with the instruments used to acquire data. Outdated tools such as laboratory information management systems (LIMS), chromatography data systems (CDS), and stand-alone instrument systems have data integrity gaps that may include absent or limited audit trails, limited user and data controls, security gaps, no vendor support, no support for electronic records or electronic signatures, and performance and data loss gaps. These problems are exacerbated when outdated instruments run old software on older stand-alone PCs that are not connected to a network for data storage. Such PCs carry added risks, including uncontrolled local data storage, direct user access to change the date and time, unsupported operating systems, and no security updates.

Analysts pressured to “get the work done” even when instrument failures repeatedly disrupt testing schedules may explore workarounds or improvise to complete their work assignments. This may include recording additional information in instrument paper logbooks to make up for the lack of audit trails.

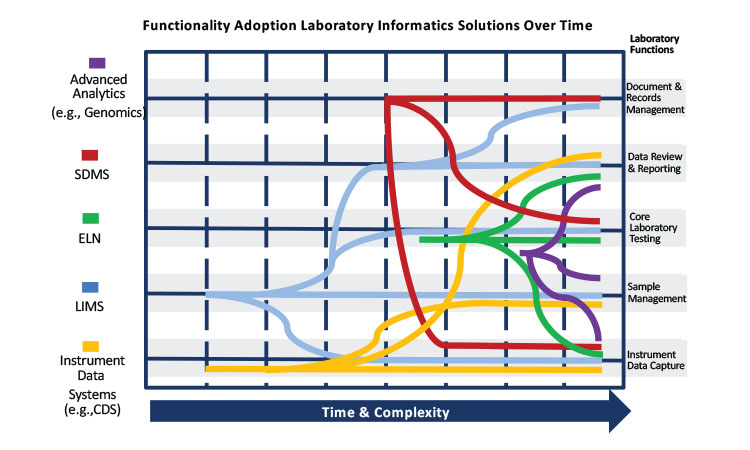

Rapidly Evolving Informatics

The number and scope of quality control laboratory informatics tools available have expanded significantly (Figure 5). Twenty years ago, many laboratories employed basic LIMS and CDS to perform quality control testing as illustrated in the left half of Figure 5. The right side of the figure illustrates the growing set of laboratory informatics tools, each with a broad set of functionalities that can collect, analyze, review, and report test results: robust LIMS and CDS, electronic laboratory notebooks, scientific data management systems, and advanced analytics (e.g., genomic analysis tools and plate-based automation for immunology testing). Each system has its own analytical/electronic/paper record with its own set of audit trails and data life cycles that must be formally qualified (e.g., GAMP validation), documented, analyzed, reviewed, reported, and managed. Quality control laboratory analysts need a broad set of skills to manage test results in multiple laboratory informatics systems.

Data Quality

In a review of recent data quality research across different industries, Redman wrote: “We estimate the cost of bad data to be 15% to 25% of revenue for most companies” [23]. Yet while the accuracy of laboratory test results (the “accurate” in ALCOA+) is assumed, RFT documentation (RFTDoc) error rates for paper-based laboratory records typically range between 20% and 50%. This may surprise those who are unfamiliar with laboratory operations. The resulting documentation corrections generate rework and stress for laboratory analysts.

While many quality control laboratories and the analysts working in them produce quality work, the pressures can elevate data integrity risks and sow potential seeds of failure—or even fraud. Proactive steps are needed to reduce and mitigate these risks.

“Trust takes years to build, seconds to break, and forever to repair.” (Anonymous)

Key Enablers

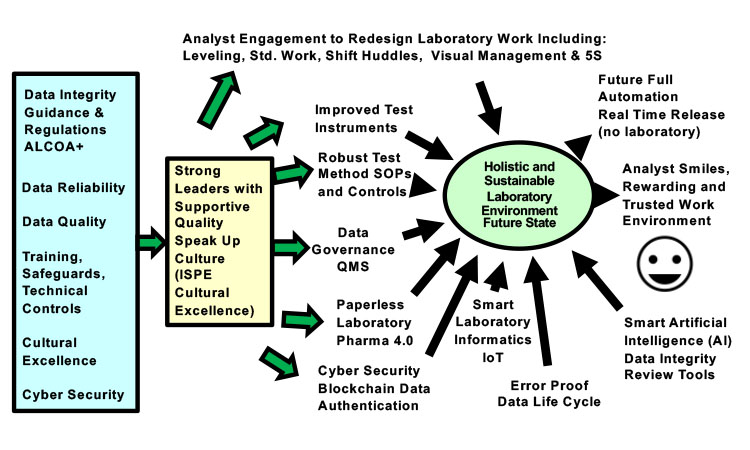

The discussion below lists elements that contribute to a holistic and sustainable future quality control laboratory environment with lower overall data integrity risks (Figure 6).

Establish Cultural Excellence

Engage quality control laboratory analysts and supervisors in designing the future state, to promote an environment in which analysts feel comfortable sharing errors and quality issues. Encourage cultural excellence within the laboratory and across the site through strong leaders with a quality vision, quality mindsets, leading quality metrics, transparent reporting, and Gemba walks that cover both the process and the data life cycle, as described in detail in ISPE’s Cultural Excellence Report [24].

Improve Test Methods

Improving or replacing outdated and/or flawed quality control test methods is a critical step in providing reliable and trustworthy quality control test results. Asking for analyst input and participation can identify test methods with high failure rates, weak robustness, low productivity, and safety issues. A quality risk management (QRM) approach can screen test methods, support a business case, and prioritize what to work on first. Test method mapping can be used to capture undocumented “tribal knowledge” and improve existing SOPs. New test methods with updated analytics, instrumentation, and informatics are sometimes required. Even for minor changes, such as wording clarifications, a regulatory assessment is recommended to determine whether a regulatory filing is required. Where significant changes are made, a regulatory filing assessment is required.
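One common QRM tool for this kind of screening is failure mode and effects analysis (FMEA), which ICH Q9 lists among its risk management methods. The sketch below ranks test methods by an FMEA-style risk priority number (severity x occurrence x detectability). The 1-5 scales, the scoring hints in the comments, the method names, and the tie-breaking rule are illustrative assumptions rather than values from this article; in practice, analysts' input on failure rates and robustness would feed the scores.

```python
from dataclasses import dataclass


@dataclass
class MethodRisk:
    method: str
    severity: int       # 1-5: impact of an unreliable result on batch disposition decisions
    occurrence: int     # 1-5: how often the method fails (e.g., invalid runs, lab investigations)
    detectability: int  # 1-5: 5 means a failure is hardest to detect during data review

    @property
    def rpn(self) -> int:
        """FMEA-style risk priority number: severity x occurrence x detectability."""
        return self.severity * self.occurrence * self.detectability


def prioritize(methods: list[MethodRisk]) -> list[MethodRisk]:
    """Order methods for remediation: highest RPN first, ties broken by higher occurrence."""
    return sorted(methods, key=lambda m: (m.rpn, m.occurrence), reverse=True)
```

The RPN is a ranking aid, not an absolute risk measure: two methods with the same RPN can carry quite different risks, which is why this sketch breaks ties on occurrence and why a team review of the ranked list is still needed.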

Update Testing SOPs and Controls

Clear, straightforward definitions, requirements, and controls for quality control testing provide a trusted testing environment, and robust, objective testing SOPs are essential for mitigating data integrity risks. Parenteral Drug Association Technical Report No. 80, “Data Integrity Management System for Pharmaceutical Laboratories,” is recent guidance that details common chromatography data processing and peak integration gaps that should be defined and controlled in SOPs. The report also discusses microbiology data integrity risks that should be mitigated with strong SOPs, including those in environmental monitoring, sterility, and bacterial endotoxin testing [15].

- [20] Newton, M.E., and R.D. McDowall. “Data Integrity in the GxP Chromatography Laboratory, Part III: Integration and Interpretation of Data.” Chromatography Online 36, no. 5 (1 May 2018): 330–335.

- [21] Wakeham, Charlie, and Thomas Haag. “Throwing People into the Works.” Pharmaceutical Engineering 36, no. 2 (March–April 2016): 42–46.

- [22] Wakeham, Charlie, and Thomas Haag. “Doing the Right Thing.” Pharmaceutical Engineering 36, no. 2 (March–April 2016): 60–64.

- [23] Redman, Thomas C. “Seizing Opportunity in Data Quality.” MIT Sloan Management Review: Frontiers Blog, 27 November 2017. https://sloanreview.mit.edu/article/seizing-opportunity-in-data-quality

- [24] International Society for Pharmaceutical Engineering. “Cultural Excellence Report.” April 2017.

Update Test Instruments

Updated laboratory instruments with improved technical controls for user account security, electronic records, and electronic signatures are critical for a productive and compliant laboratory. Equally important are full control of instrument parameters, including metadata capture, and control of all records generated throughout their data life cycle. Replacing outdated instruments with modern 21 CFR Part 11–compliant instruments and enabling an appropriate GMP configuration lowers overall data integrity risks, reduces laboratory errors, increases reliability, and improves the work environment.

Manage Work Volume

High or low work volumes, combined with day-to-day variability, make up a large part of the pressures on today’s QC laboratory analyst. Detailed data analysis and leveling solutions, combined with internal customer lead-time requirements and standard work roles constructed and verified by laboratory analysts, can distribute work evenly among analysts and across workdays. With QC analyst participation, the result is a fair day’s work for all on a consistent and repeatable basis.
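Workload leveling can be approached with standard scheduling heuristics. The sketch below uses longest-processing-time-first (LPT) greedy assignment, a well-known heuristic swapped in here purely for illustration; the article does not specify a leveling algorithm. The test IDs, analyst names, and hour estimates are hypothetical; per the text, the estimates would come from standard work times that analysts have constructed and verified.

```python
import heapq


def level_workload(tests: dict[str, float], analysts: list[str]) -> dict[str, list[str]]:
    """Assign tests to analysts using longest-processing-time-first (LPT) greedy leveling.

    `tests` maps a test ID to its estimated hands-on hours (hypothetical standard-work times).
    """
    # Min-heap of (current load in hours, analyst name): the least-loaded analyst pops first.
    heap = [(0.0, a) for a in analysts]
    heapq.heapify(heap)
    assignment: dict[str, list[str]] = {a: [] for a in analysts}
    # Place the longest tests first so that short tests can fill the remaining gaps.
    for test_id, hours in sorted(tests.items(), key=lambda kv: kv[1], reverse=True):
        load, analyst = heapq.heappop(heap)
        assignment[analyst].append(test_id)
        heapq.heappush(heap, (load + hours, analyst))
    return assignment
```

For tests of 4, 3, 3, 2, and 2 hours split between two analysts, this sketch produces loads of 8 and 6 hours. LPT is fast but not always optimal (a 7/7 split exists here), so planners and analysts may still adjust the result.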

Go Beyond Human Error

An understanding of human behavior, as described in James Reason’s book Human Error [25], is the first step in moving away from blaming and retraining analysts for laboratory errors. Root causes described as “human error” need to be traced more deeply to fully understand their origins. Examples include failures in systems, processes, and organizations; flawed defenses (e.g., a faulty temperature sensor in a stability chamber); and error precursors (e.g., performing a test for the first time after returning from medical leave). Human error programs work best when incorporated into the investigation and CAPA quality systems. Given cognitive limitations and published studies on human error rates [21], an organizational culture that values and practices openness is critical to reaching root causes and determining preventive measures. Full use of laboratory informatics to interface instruments and systems will significantly reduce human error (and data integrity risks) related to data entry, transcription, and other manual activities.

Expand Data Reviews

The level of detail required for comprehensive reviews of QC laboratory data continues to expand, but tools to assist the laboratory analyst with data reviews have been slow to enter the market. Pharmaceutical companies have started to develop their own informatics tools, including simple user interfaces that run queries at the click of a button to look for data integrity issues such as alteration of raw data, repeat testing of the same sample, incomplete or missing records, substitution of test results, use of manual integration or reintegration/reprocessing of chromatography peaks, substitution of calibration curves, trial injections, non-contemporaneous dating (backdating, predating), and data deletions. A formal definition of what is in the laboratory record (including metadata and audit trails), combined with clear definitions of which elements are included in the review process, is a best practice. Review-by-exception approaches continue to be employed on a limited basis for low-risk test results.
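Queries of this kind can be sketched over a flat audit trail export. The Python example below is an illustration built on assumptions: the `AuditEntry` fields, the action codes, and the one-hour contemporaneity tolerance are hypothetical, since real CDS and LIMS audit trails use vendor-specific schemas. It covers a subset of the issues named above: manual integration, reprocessing, deletions, replaced calibrations, multiple result sets for the same sample, and non-contemporaneous entries.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime, timedelta

# Hypothetical action codes; real audit trails use vendor-specific vocabularies.
FLAGGED_ACTIONS = {"manual_integration", "reprocess", "delete", "calibration_replaced"}


@dataclass
class AuditEntry:
    sample_id: str
    action: str              # e.g. "acquire", "result_saved", "manual_integration"
    user: str
    activity_time: datetime  # time the analyst states the work was done
    system_time: datetime    # server timestamp written by the audit trail


def exception_report(entries: list[AuditEntry],
                     max_lag: timedelta = timedelta(hours=1)) -> list[tuple[str, str]]:
    """Return (sample_id, reason) pairs that a reviewer should examine."""
    flags = []
    for e in entries:
        if e.action in FLAGGED_ACTIONS:
            flags.append((e.sample_id, f"{e.action} by {e.user}"))
        lag = e.system_time - e.activity_time
        # Recorded long after the stated time (possible backdating) or dated in the future (predating).
        if lag > max_lag or lag < timedelta(0):
            flags.append((e.sample_id, "non-contemporaneous entry"))
    # More than one saved result set for a sample may indicate repeat testing.
    results = Counter(e.sample_id for e in entries if e.action == "result_saved")
    for sample_id, n in results.items():
        if n > 1:
            flags.append((sample_id, f"{n} result sets saved (possible repeat testing)"))
    return flags
```

Samples that produce no findings would be candidates for the limited review-by-exception approach described above. Flagged samples would still go to full human review, because a flag marks something to examine, not proof of misconduct.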

Update Laboratory Informatics

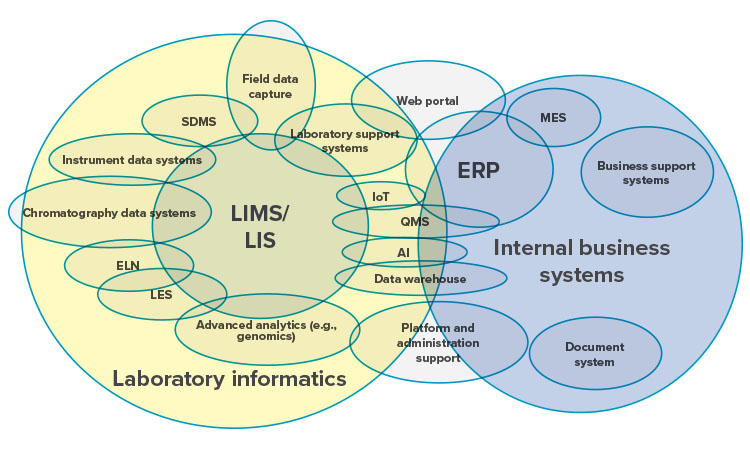

Laboratory informatics have become an important element in planning, preparing, capturing, analyzing, trending, reporting, and storing laboratory test results. The ASTM E1578 Standard Guide for Laboratory Informatics was updated in 2018 to include the subjects of data integrity, artificial intelligence, blockchain, and cloud-based platform-as-a-service and software-as-a-service tools.

Integrating informatics tools within the laboratory, with other business systems, and in some cases with external partners, has expanded the landscape and complexity of implementing and sustaining these critical tools. The large yellow circle on the left side of Figure 7 shows examples of informatics tools that can be found in today’s laboratories. The blue circle on the right side shows examples of internal business tools that may exchange data with the laboratory informatics tools. The figure as a whole illustrates both the complexity and overlap between laboratory informatics tools, business systems, and external organizations.

Hospital and clinical settings that use laboratory information systems (LIS) are increasingly active in the development, production, and testing of chimeric antigen receptor (CAR) T-cell and gene-based therapies. The intricate, decentralized supply chains that these patient-centered therapies require (patient-manufacturer-patient) further increase the complexity of managing and reviewing their digital records. Fortunately, innovation in this space is moving rapidly, with new processes and evolving laboratory informatics to support clinical investigations. These tools stitch together and help manage the many dispersed elements of the data life cycle for CAR-T and gene-based therapies.26

Turning on technical controls in existing laboratory informatics solutions (e.g., LIMS/LIS/CDS) will go a long way toward reducing data integrity risks. Laboratory informatics tools provide complete record capture with minimal manual data entry, lowering the risk of data integrity failures. As these tools are adopted, the skills analysts need to use them for advanced analysis of laboratory data continue to grow. Laboratory informatics vendors are also slowly developing additional technical tools to help laboratories address expanding data integrity needs.

Future Applications

Artificial Intelligence

While artificial intelligence (AI) is in its infancy within laboratory environments, the idea of using AI to detect and lower data integrity risk is promising. AI learning involves examining data sets, links between data, search algorithms, and variables to find new insights. AI has the potential to digest laboratory data quickly and make decisions as laboratory transactions are processed. To realize these benefits for data integrity, AI should be integrated with laboratory informatics applications (e.g., LIMS, CDS, and Internet of Things [IoT] devices).

Laboratory informatics applications are beginning to embrace AI by defining, learning, and utilizing data ontologies (concepts, categories, and the relations between them) to support laboratory functions. Analyses of complex data sets such as genomics utilize AI for searches and pattern recognition. Smart tools with limited AI capabilities can assist with supportive laboratory functions and testing.

Looking to the future, laboratory informatics vendors have an opportunity to incorporate elements of AI that accelerate and improve the expanding data reviews that support data integrity. Potential applications include verifying data accuracy, verifying that data capture and entry are contemporaneous, recognizing outliers in the continuous flow of data from sample receipt to sample destruction, reviewing audit trails for data integrity risks, and examining data patterns for copied data, missing data, duplicate testing, manual integration of chromatography data, and test injections in chromatography testing. Future AI tools in laboratory environments may help analysts conduct routine testing, relieve data review workloads, and restore trust in laboratory test results.
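Two of the pattern checks mentioned above, outlier recognition and detection of copied data, can be illustrated without any AI at all. The sketch below is a deliberately simple statistical stand-in, not a machine-learning model: it uses the modified z-score (median and median absolute deviation, with the commonly cited 3.5 cutoff) to flag outliers, and it looks for identical runs of consecutive results that reappear later in a series. Function names, the run length of 3, and the sample values are hypothetical assumptions for illustration.

```python
from statistics import median


def robust_outliers(values, threshold=3.5):
    """Return indices whose modified z-score exceeds `threshold`.

    Uses the median and median absolute deviation (MAD), which a single
    extreme result cannot distort the way it distorts a mean and standard deviation.
    """
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        # More than half the values are identical, so the score is undefined;
        # this sketch returns no flags in that case.
        return []
    return [i for i, v in enumerate(values)
            if abs(0.6745 * (v - med) / mad) > threshold]


def copied_runs(values, length=3):
    """Return (first_start, repeat_start) pairs where an identical run of
    `length` consecutive values appears again later in the series."""
    seen = {}
    hits = []
    for i in range(len(values) - length + 1):
        window = tuple(values[i:i + length])
        if window in seen:
            hits.append((seen[window], i))
        else:
            seen[window] = i
    return hits
```

An AI-based tool would presumably learn richer patterns than these fixed rules, but rules like these show the kind of signal such a tool would be expected to surface for the analyst.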

Internet of Things

Manual recording or transcription of laboratory data remains a data integrity risk. Current practice for connecting instruments to a laboratory informatics solution is either by direct connection or through middleware. These interfacing methods remain costly and time-consuming. IoT offers a path to greater instrument connectivity with less human interaction and lower data integrity risk. Implementing IoT in the laboratory comes with its own considerations, including communication methods, security, validation, and quality of data.
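One of the IoT considerations named above, security of data in transit, can be sketched with a keyed message authentication code. The example below assumes, hypothetically, that an instrument stamps each reading with a UTC capture time and signs it with HMAC-SHA256 using a key shared with the receiving informatics system; the receiver rejects any message whose tag does not match. The field names and key are illustrative only, and key provisioning, transport encryption, and validation are out of scope for this sketch.

```python
import hashlib
import hmac
import json
from datetime import datetime, timezone
from typing import Optional


def capture_reading(device_id: str, value: float) -> dict:
    # Timestamp at the device, in UTC, at the moment of capture.
    return {"device_id": device_id, "value": value,
            "captured_at": datetime.now(timezone.utc).isoformat()}


def sign_reading(key: bytes, reading: dict) -> dict:
    # Serialize deterministically (sorted keys, compact separators);
    # the receiver verifies these exact bytes.
    body = json.dumps(reading, sort_keys=True, separators=(",", ":"))
    tag = hmac.new(key, body.encode("utf-8"), hashlib.sha256).hexdigest()
    return {"body": body, "hmac": tag}


def verify_reading(key: bytes, message: dict) -> Optional[dict]:
    # Recompute the tag and compare in constant time; reject on mismatch.
    expected = hmac.new(key, message["body"].encode("utf-8"),
                        hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, message["hmac"]):
        return None
    return json.loads(message["body"])
```

Stamping the time at the device rather than at the server is one way to support contemporaneous recording; the HMAC only shows that the payload was not altered after signing, not that the instrument measured correctly.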

- 26Weinstein, Aaron, Donald Powers, and Orlando A. Serani. “CAR-T Supply Chain: Challenging, Achievable, Realistic and Transformational.” Presented at the ISPE 2018 Annual Meeting & Expo, 4–7 November 2018.

Source: Reprinted with permission, from ASTM E1578-18 Standard Guide for Laboratory Informatics, Figure 1, copyright ASTM International, 100 Barr Harbor Drive, West Conshohocken, PA 19428. A copy of the complete standard may be obtained from ASTM International, www.astm.org.

Blockchain

Blockchain is an evolving distributed-ledger technology that uses cryptography to provide secure identity management and authentication of records. Blockchain is in the early phase of adoption in laboratory and medical data systems, with a focus on completed final reports (e.g., certificates of analysis) rather than individual laboratory transactions. Blockchain technology operates in a decentralized way over multiple computer servers using cryptography, distributed ledgers, smart contracts, hashed IDs, and tokenization to provide a dynamic repository of traceable and secure transactional records. Organizations are beginning to use blockchain to track and trace entire supply chains from raw materials to final products delivered to patients. Laboratory communities are starting to experiment with blockchain to improve data portability, integrity, auditability, and security.

Transaction data stored in a blockchain is extremely difficult to alter without detection, because each block carries a cryptographic hash of the block before it; changing an earlier record invalidates the links that follow it. Data integrity is critical for clinical trials (and all GMP laboratories), and blockchain technology provides trusted records with strong data integrity.27 Blockchain also has the potential to change how data audits (internal and external) are performed in the future. Another clinical application may allow data records to be shared between collaborating partners across networks. The application of blockchain technology may also help restore confidence in both the work performed and the laboratory analyst.
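The tamper-evidence property described above comes from hash chaining. The sketch below shows only that one ingredient on a single machine: each appended record stores the SHA-256 hash of the previous block, so editing any stored record makes verification fail. It is an illustrative assumption, not a blockchain implementation; it omits the distributed ledger, consensus, and smart contracts the text describes, and the certificate-of-analysis fields are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first block


def block_hash(index, prev_hash, record):
    # Hash the block's position, its link to the previous block, and its content.
    payload = json.dumps({"index": index, "prev": prev_hash, "record": record},
                         sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append(chain, record):
    prev = chain[-1]["hash"] if chain else GENESIS
    index = len(chain)
    chain.append({"index": index, "prev": prev, "record": record,
                  "hash": block_hash(index, prev, record)})


def verify(chain):
    # Walk the chain, recomputing every hash and checking every link.
    prev = GENESIS
    for i, block in enumerate(chain):
        if block["index"] != i or block["prev"] != prev:
            return False
        if block["hash"] != block_hash(i, prev, block["record"]):
            return False
        prev = block["hash"]
    return True
```

In a real blockchain, copies of the chain held by many independent parties are what prevent someone from simply recomputing every hash after an edit; a single local chain like this one only detects changes, it does not prevent them.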

Cybersecurity

Cybersecurity risks overlap with data integrity risks, since laboratory instruments connected to global informatics tools, and data-rich environments generally, are potential targets for cyberattacks both in the laboratory and across the wider organization. Our interviews with data integrity leaders confirmed that formal controls must be in place and actively monitored to mitigate cybersecurity risks.

Dozens of firms worldwide were hit in the June 2017 “NotPetya” malware attack, which began as an attack on Ukraine (attributed to Russian actors) and then spread rapidly through multinational corporations (including health care companies) with operations or suppliers in Eastern Europe. Major manufacturing and laboratory operations were disrupted as entire systems, databases, and data files became inaccessible, resulting in significant financial losses.28

Next Steps

Sustaining data integrity for the long term requires diligence and creativity to keep the principles of ALCOA+ fresh in the fabric of the daily work and to help establish “a way of working” culture that values trust, integrity, and an openness to sharing failures.

The steps required to implement a holistic and sustainable future state laboratory environment that values trust and integrity vary with the organization and laboratory’s maturity level. While a detailed description of the future state implementation methodology is beyond the scope of this article, the high-level steps include:

- Laboratory analyst participation

- Holistic assessment of the current laboratory state

- Mindset and behavior assessment to understand the current organizational culture

- Detailed data life-cycle review

- Technical assessment of critical laboratory processes with high data integrity risks (e.g., chromatography, microbiology) and implementation of corrective measures

- Business, QRM, and regulatory assessment and remediation of laboratory test methods and SOPs

- Business, QRM, and regulatory assessment and remediation of laboratory instruments

- Detailed data assessment of laboratory work volume followed by a detailed leveling design supported with visual management tools and metrics

- Iterative implementation of leveling and flow solutions combined with standard work role cards

- Laboratory informatics and data governance assessment, followed by future-state design using paperless laboratory and instrument integration, plus GAMP methodology for data governance and validation

- Adoption of advanced IT technologies (where appropriate), including blockchain and AI, to help restore trust in laboratory data

- Human-error training supporting laboratory investigations, CAPAs, and laboratory workflow design

- Development and implementation of cultural excellence—including process and data life cycle Gemba walks—and smart metrics to stimulate human behavioral changes and sustain data integrity.

Conclusions

Corporate data integrity programs remain in the trenches after more than three years of assessment and remediation efforts. These programs have produced many assessments and remediation deliverables, but they have not necessarily yet achieved the cultural excellence needed to sustain data integrity. Although many companies have included a focus on people in their data integrity programs, major activities in this area have been conducting awareness and SOP training. Only a few have reported including a focus on developing, monitoring, and improving organizational culture as a key element in their transition to holistic, ongoing data governance.

Some companies are struggling to sustain data integrity and are looking at alternate approaches. Continued focus on strengthening culture, improving the integration of data governance within the PQS, and embracing the Pharma 4.0 enablers of data integrity by design and digital maturity will be essential to creating the quality control laboratory of the future. Behavioral change-management campaigns that strengthen culture via tools such as Gemba walks and data integrity process-risk analyses were rarely mentioned by the companies we interviewed.

The challenges and pressures that quality control laboratory analysts face in meeting data management expectations contribute to difficulties in managing data integrity risks, as well as in maintaining operational efficiency and effectiveness. We noted several key themes, including outdated test methods and instruments; increasing work complexity and volume, especially for data reviews; performance metrics and time pressures; human error; data quality; large and complex data sets; and outdated informatics tools.

Over the past 15 years, laboratory informatics vendors have gradually improved their products to comply with 21 CFR Part 11 requirements. Current product offerings, however, fall short in some areas of what quality control laboratories need to support the new operational focus on data integrity. Several companies have taken on internal IT projects to develop data review tools that support the quality control laboratory. The adoption of smart analytics and AI tools in the quality control workflow offers the potential to transform the data life cycle from capture to review, speeding overall data analysis and detection of data integrity issues. Updated laboratory informatics, AI, and blockchain tools also carry the potential to reinject trust into laboratory test results and the work of the laboratory analyst. Renewed efforts between laboratory informatics vendors, regulators, and the pharmaceutical industry are needed to address these critical business needs.

In this article we focused on the role of the quality control laboratory analyst in recognition of the critical role the analyst has in assuring product quality and patient safety. The connection between a corporate culture of data integrity and the resulting benefits to product quality and patient safety cannot be overlooked. Additional work is needed to create laboratory environments that restore trust in analysts and their test results as well as customers’ faith in safe and readily available products. This requires engaged and supportive leadership that will collaborate with quality control laboratory analysts to implement the best practices and minimize the pressures discussed.

The quality control laboratory analyst needs our help to create a new laboratory environment that delivers consistent high-quality test results that are trusted and valued by our customers.